
In quantum mechanics, the EPR paradox (or Einstein–Podolsky–Rosen paradox) is a thought experiment which challenged long-held ideas about the relation between the observed values of physical quantities and the values that can be accounted for by a physical theory. "EPR" stands for Einstein, Podolsky, and Rosen, who introduced the thought experiment in a 1935 paper to argue that quantum mechanics is not a complete physical theory.[1][2]

According to its authors, the EPR experiment yields a dichotomy. Either

- The result of a measurement performed on one part A of a quantum system has a non-local effect on the physical reality of another distant part B, in the sense that quantum mechanics can predict outcomes of some measurements carried out at B; or

- Quantum mechanics is incomplete in the sense that some element of physical reality corresponding to B cannot be accounted for by quantum mechanics (that is, some extra variable is needed to account for it).

As Bell later showed, one cannot introduce local "elements of reality" without affecting the predictions of the theory. That is, one cannot complete quantum mechanics with such local "elements" without contradicting some of its statistical predictions.

Einstein never accepted quantum mechanics as a "real" and complete theory, searching until the end of his life for an interpretation that would be consistent with relativity without being bound by the Heisenberg uncertainty principle. His remark "God does not play dice" expressed his skepticism toward the Copenhagen interpretation of quantum mechanics, which holds that there exists no objective physical reality other than that which is revealed through measurement and observation.

The EPR paradox is a paradox in the following sense: if one adds to quantum mechanics some seemingly reasonable (but actually wrong, or at least questionable when taken together) conditions, namely locality, realism (not to be confused with philosophical realism), counterfactual definiteness, and completeness (see Bell inequality and Bell test experiments), then one obtains a contradiction. However, quantum mechanics by itself does not appear to be internally inconsistent, nor, as it turns out, does it contradict relativity. As a result of further theoretical and experimental developments since the original EPR paper, most physicists today regard the EPR paradox as an illustration of how quantum mechanics violates classical intuitions.

Quantum mechanics and its interpretation

During the twentieth century, quantum theory proved to be a successful theory, which describes the physical reality of the mesoscopic and microscopic world.

Quantum mechanics was developed with the aim of describing atoms and explaining the spectral lines observed with measuring apparatus. That quantum theory allows an accurate description of reality is clear from many physical experiments and has probably never been seriously disputed. Interpretations of quantum phenomena are another story.

The question of how to interpret the mathematical formulation of quantum mechanics has given rise to a variety of different answers from people of different philosophical backgrounds.

Quantum theory does not account for individual measurement outcomes in a deterministic way. According to a widely accepted interpretation of quantum mechanics known as the Copenhagen interpretation, a measurement causes an instantaneous collapse of the wave function describing the quantum system into an eigenstate of the observable that was measured.

The most prominent opponent of the Copenhagen interpretation was Albert Einstein. Einstein did not believe in genuine randomness in nature, a central tenet of the Copenhagen interpretation. In his view, quantum mechanics was incomplete, and there had to be 'hidden' variables responsible for the apparently random measurement results.

The famous paper "Can Quantum-Mechanical Description of Physical Reality Be Considered Complete?"[4], authored by Einstein, Podolsky and Rosen in 1935, condensed the philosophical discussion into a physical argument. They argued that if, in a specific experiment, the outcome of a measurement can be known before the measurement takes place, then there must exist something in the real world, an "element of reality", that determines the measurement outcome. They postulated that these elements of reality are local, in the sense that each belongs to a certain point in spacetime and may only be influenced by events located in the backward light cone of that point. Even though these claims sound reasonable and convincing, they are founded on assumptions about nature which constitute what is now known as local realism.

Though the EPR paper has often been taken as an exact expression of Einstein's views, it was primarily authored by Podolsky, based on discussions at the Institute for Advanced Study with Einstein and Rosen. Einstein later expressed to Erwin Schrödinger that "It did not come out as well as I had originally wanted; rather, the essential thing was, so to speak, smothered by the formalism."[3]

Description of the paradox

The EPR paradox draws on a phenomenon predicted by quantum mechanics, known as quantum entanglement, to show that measurements performed on spatially separated parts of a quantum system can apparently have an instantaneous influence on one another.

This effect is now known as "nonlocal behavior" (or colloquially as "quantum weirdness" or "spooky action at a distance").

Simple version

Before delving into the complicated logic that leads to the 'paradox', it is perhaps worth mentioning the simple version of the argument, as described by Greene and others, which Einstein used to show that 'hidden variables' must exist.

Two electrons are emitted from a source, by pion decay, so that their spins are opposite; one electron’s spin about any axis is the negative of the other’s. Also, due to uncertainty, making a measurement of a particle’s spin about one axis disturbs the particle, so that you can no longer measure its spin about any other axis.

Now say you measure one electron’s spin about the x-axis. This automatically tells you the other electron’s spin about the x-axis. Since you’ve done the measurement without disturbing the other electron in any way, it can’t be that the other electron "only came to have that state when you measured it", because you didn’t measure it! It must have had that spin all along. Also (although you can’t actually do it now that you’ve disturbed the electron), you could have taken the measurement about any other axis. So it follows that the other electron also had a definite spin about any other axis – much more information than the particle is capable of holding, and a "hidden variable" according to EPR.

Measurements on an entangled state

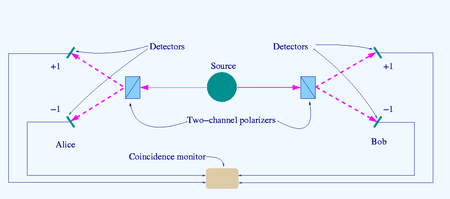

We have a source that emits pairs of electrons, with one electron sent to destination A, where there is an observer named Alice, and another sent to destination B, where there is an observer named Bob. According to quantum mechanics, we can arrange our source so that each emitted electron pair occupies a quantum state called a spin singlet. This can be viewed as a quantum superposition of two states, which we call state I and state II. In state I, electron A has spin pointing upward along the z-axis (+z) and electron B has spin pointing downward along the z-axis (-z). In state II, electron A has spin -z and electron B has spin +z. Therefore, it is impossible to associate either electron in the spin singlet with a state of definite spin. The electrons are thus said to be entangled.

Alice now measures the spin along the z-axis. She can obtain one of two possible outcomes: +z or -z. Suppose she gets +z. According to quantum mechanics, the quantum state of the system collapses into state I. (Different interpretations of quantum mechanics have different ways of saying this, but the basic result is the same.) The quantum state determines the probable outcomes of any measurement performed on the system. In this case, if Bob subsequently measures spin along the z-axis, he will obtain -z with 100% probability. Similarly, if Alice gets -z, Bob will get +z.

There is, of course, nothing special about our choice of the z-axis. For instance, suppose that Alice and Bob now decide to measure spin along the x-axis. According to quantum mechanics, the spin singlet state may equally well be expressed as a superposition of spin states pointing in the x direction. We'll call these states Ia and IIa. In state Ia, Alice's electron has spin +x and Bob's electron has spin -x. In state IIa, Alice's electron has spin -x and Bob's electron has spin +x. Therefore, if Alice measures +x, the system collapses into Ia, and Bob will get -x. If Alice measures -x, the system collapses into IIa, and Bob will get +x.

In quantum mechanics, the x-spin and z-spin are "incompatible observables", which means that there is a Heisenberg uncertainty principle operating between them: a quantum state cannot possess a definite value for both variables. Suppose Alice measures the z-spin and obtains +z, so that the quantum state collapses into state I. Now, instead of measuring the z-spin as well, Bob measures the x-spin. According to quantum mechanics, when the system is in state I, Bob's x-spin measurement will have a 50% probability of producing +x and a 50% probability of -x. Furthermore, it is fundamentally impossible to predict which outcome will appear until Bob actually performs the measurement.
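The 50/50 statistics above follow from the Born rule. The following is a minimal sketch that assumes each single-electron spin state is written as a two-component real vector of amplitudes (for +z and for -z). The constant and function names are illustrative and are not taken from the text. The sketch computes the probabilities of Bob's possible outcomes once Alice's +z result has left his electron in the state -z:

```python
import math

# Single-electron spin states as (amplitude of +z, amplitude of -z); names are illustrative.
PLUS_Z = (1.0, 0.0)
MINUS_Z = (0.0, 1.0)
PLUS_X = (1 / math.sqrt(2), 1 / math.sqrt(2))
MINUS_X = (1 / math.sqrt(2), -1 / math.sqrt(2))


def born_probability(outcome, state):
    """Probability |<outcome|state>|^2 of finding `state` in `outcome` (real amplitudes)."""
    amplitude = sum(o * s for o, s in zip(outcome, state))
    return amplitude ** 2


# Alice obtained +z, so the pair is in state I and Bob's electron is in -z.
bob_state = MINUS_Z
for name, outcome in [("+z", PLUS_Z), ("-z", MINUS_Z), ("+x", PLUS_X), ("-x", MINUS_X)]:
    print(f"P({name}) = {born_probability(outcome, bob_state):.2f}")
```

The sketch prints P(-z) = 1.00 and P(+x) = P(-x) = 0.50. A z-measurement by Bob is therefore certain, while an x-measurement is completely random.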

Here is the crux of the matter. You might imagine that, when Bob measures the x-spin of his particle, he would get an answer with absolute certainty, since prior to this he hasn't disturbed his electron at all. But, as described above, Bob's electron has a 50% probability of producing +x and a 50% probability of producing -x: random behaviour, not certain. It is as if Bob's electron 'knows' that Alice's electron has been measured and its z-spin detected, and hence that its own z-spin is now fixed, so its x-spin is 'out of bounds'.

Put another way, how does Bob's electron know, at the same time, which way to point if Alice decides (based on information unavailable to Bob) to measure x (i.e. be the opposite of Alice's electron's spin about the x-axis) and also how to point if Alice measures z (i.e. behave randomly), since it is only supposed to know one thing at a time? Using the usual Copenhagen interpretation rules that say the wave function "collapses" at the time of measurement, there must be action at a distance (entanglement) or the electron must know more than it is supposed to (hidden variables).

In case the explanation above is confusing, here is the paradox summed up:

Two electrons are emitted, shoot off, and are measured later. Whenever both spins are measured along the same axis, they are always found to be opposite. This can only be explained if the electrons are linked in some way. Either they were created with a definite (opposite) spin about every axis (a "hidden variable" argument), or they are linked so that one electron knows what axis the other is having its spin measured along, and becomes its opposite about that one axis (an "entanglement" argument). Moreover, if the two electrons have their spins measured about different axes, once A's spin has been measured about the x-axis (and B's spin about the x-axis deduced), B's spin about the y-axis will no longer be certain, as if it knows that the measurement has taken place. Either that, or it already has a definite spin about a second axis, which would be a hidden variable.

Incidentally, although we have used spin as an example, many types of physical quantities — what quantum mechanics refers to as "observables" — can be used to produce quantum entanglement. The original EPR paper used momentum for the observable. Experimental realizations of the EPR scenario often use photon polarization, because polarized photons are easy to prepare and measure.

Reality and completeness

We will now introduce two concepts used by Einstein, Podolsky, and Rosen (EPR), which are crucial to their attack on quantum mechanics: (i) the elements of physical reality and (ii) the completeness of a physical theory.

The authors (EPR) did not directly address the philosophical meaning of an "element of physical reality". Instead, they made the assumption that if the value of any physical quantity of a system can be predicted with absolute certainty prior to performing a measurement or otherwise disturbing it, then that quantity corresponds to an element of physical reality. Note that the converse is not assumed to be true; even if there are some "elements of physical reality" whose value cannot be predicted, this will not affect the argument.

Next, EPR defined a "complete physical theory" as one in which every element of physical reality is accounted for. The aim of their paper was to show, using these two definitions, that quantum mechanics is not a complete physical theory.

Let us see how these concepts apply to the above thought experiment. Suppose Alice decides to measure the value of spin along the z-axis (we'll call this the z-spin). After Alice performs her measurement, the z-spin of Bob's electron is definitely known, so it is an element of physical reality. Similarly, if Bob decides to measure the spin of his electron along the x-axis, the x-spin of Alice's electron becomes an element of physical reality after the measurement. After such measurements, the conclusion that Alice's and Bob's electrons now each have definite values of spin along both the x- and z-axes simultaneously is inevitable.

We have seen that a quantum state cannot possess a definite value for both x-spin and z-spin. If quantum mechanics is a complete physical theory in the sense given above, x-spin and z-spin cannot be elements of reality at the same time. This means that Alice's decision — whether to perform her measurement along the x- or z-axis — has an instantaneous effect on the elements of physical reality at Bob's location. However, this violates another principle, that of locality.

Locality in the EPR experiment

The principle of locality states that physical processes occurring at one place should have no immediate effect on the elements of reality at another location. At first sight, this appears to be a reasonable assumption to make, as it seems to be a consequence of special relativity, which states that information can never be transmitted faster than the speed of light without violating causality. It is generally believed that any theory which violates causality would also be internally inconsistent, and thus deeply unsatisfactory.

It turns out that the usual rules for combining quantum mechanical and classical descriptions violate the principle of locality without violating causality. Causality is preserved because there is no way for Alice to transmit messages (i.e. information) to Bob by manipulating her measurement axis. Whichever axis she uses, she has a 50% probability of obtaining "+" and 50% probability of obtaining "-", completely at random; according to quantum mechanics, it is fundamentally impossible for her to influence what result she gets. Furthermore, Bob is only able to perform his measurement once: there is a fundamental property of quantum mechanics, known as the "no cloning theorem", which makes it impossible for him to make a million copies of the electron he receives, perform a spin measurement on each, and look at the statistical distribution of the results. Therefore, in the one measurement he is allowed to make, there is a 50% probability of getting "+" and 50% of getting "-", regardless of whether or not his axis is aligned with Alice's.
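The claim that Alice cannot signal to Bob can be checked directly from the singlet state. The following is a minimal sketch under two assumptions. First, both measurement axes lie in the x-z plane, and each axis is specified by its angle theta from +z. Second, the two-electron state is stored as four real amplitudes in the basis (+z+z, +z-z, -z+z, -z-z). The function names are hypothetical. The sketch sums the Born-rule joint probabilities over Alice's outcomes, which Bob cannot see, and shows that Bob's own statistics do not depend on Alice's choice of axis:

```python
import math


def spin_eigenvector(theta, sign):
    """Spin-up (sign=+1) or spin-down (sign=-1) state along an axis in the x-z plane,
    at angle theta from +z, written as (amplitude of +z, amplitude of -z)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return (c, s) if sign == +1 else (-s, c)


# Spin singlet in the basis (+z+z, +z-z, -z+z, -z-z); Alice's electron is the first factor.
SINGLET = (0.0, 1 / math.sqrt(2), -1 / math.sqrt(2), 0.0)


def joint_probability(theta_a, sign_a, theta_b, sign_b):
    """Born-rule probability that Alice gets sign_a on axis theta_a and Bob gets sign_b on theta_b."""
    u = spin_eigenvector(theta_a, sign_a)
    v = spin_eigenvector(theta_b, sign_b)
    product_state = (u[0] * v[0], u[0] * v[1], u[1] * v[0], u[1] * v[1])
    amplitude = sum(p * q for p, q in zip(product_state, SINGLET))
    return amplitude ** 2


def bob_marginal(theta_a, theta_b, sign_b=+1):
    """Bob's outcome probability, summed over Alice's results, which he cannot see."""
    return sum(joint_probability(theta_a, sign_a, theta_b, sign_b) for sign_a in (+1, -1))


axes = {"z": 0.0, "x": math.pi / 2}
for alice_axis, theta_a in axes.items():
    for bob_axis, theta_b in axes.items():
        print(f"Alice {alice_axis}, Bob {bob_axis}: P(Bob gets +) = {bob_marginal(theta_a, theta_b):.3f}")
```

Every combination of axes gives Bob a probability of 0.500. Alice's choice of axis shows up only in the joint statistics, and those can be compared only after the two results have been brought together by ordinary means.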

However, the principle of locality appeals powerfully to physical intuition, and Einstein, Podolsky and Rosen were unwilling to abandon it. Einstein derided the quantum mechanical predictions as "spooky action at a distance". The conclusion they drew was that quantum mechanics is not a complete theory.

In recent years, however, doubt has been cast on EPR's conclusion due to developments in understanding locality and especially quantum decoherence. The word locality has several different meanings in physics. For example, in quantum field theory "locality" means that quantum fields at different points of space do not interact with one another. However, quantum field theories that are "local" in this sense appear to violate the principle of locality as defined by EPR, but they nevertheless do not violate locality in a more general sense. Wavefunction collapse can be viewed as an epiphenomenon of quantum decoherence, which in turn is nothing more than an effect of the underlying local time evolution of the wavefunction of a system and all of its environment. Since the underlying behaviour doesn't violate local causality, it follows that neither does the additional effect of wavefunction collapse, whether real or apparent. Therefore, as outlined in the example above, neither the EPR experiment nor any quantum experiment demonstrates that faster-than-light signaling is possible.

Resolving the paradox

Hidden variables

There are several ways to resolve the EPR paradox. The one suggested by EPR is that quantum mechanics, despite its success in a wide variety of experimental scenarios, is actually an incomplete theory. In other words, there is some yet undiscovered theory of nature to which quantum mechanics acts as a kind of statistical approximation (albeit an exceedingly successful one). Unlike quantum mechanics, the more complete theory contains variables corresponding to all the "elements of reality". There must be some unknown mechanism acting on these variables to give rise to the observed effects of "non-commuting quantum observables", i.e. the Heisenberg uncertainty principle. Such a theory is called a hidden variable theory.

To illustrate this idea, we can formulate a very simple hidden variable theory for the above thought experiment. One supposes that the quantum spin-singlet states emitted by the source are actually approximate descriptions for "true" physical states possessing definite values for the z-spin and x-spin. In these "true" states, the electron going to Bob always has spin values opposite to the electron going to Alice, but the values are otherwise completely random. For example, the first pair emitted by the source might be "(+z, -x) to Alice and (-z, +x) to Bob", the next pair "(-z, -x) to Alice and (+z, +x) to Bob", and so forth. Therefore, if Bob's measurement axis is aligned with Alice's, he will necessarily get the opposite of whatever Alice gets; otherwise, he will get "+" and "-" with equal probability.
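The toy hidden variable theory just described is simple enough to simulate. The following is a minimal sketch that assumes each pair carries predetermined z- and x-spin values, each chosen uniformly at random, with Bob's values always opposite to Alice's. The function names and the fixed seed are illustrative choices. Measurement in this sketch simply reads out the pre-existing value:

```python
import random


def emit_pair(rng):
    """Hypothetical source: each pair carries definite, opposite z- and x-spin values."""
    z = rng.choice((+1, -1))
    x = rng.choice((+1, -1))
    alice = {"z": z, "x": x}
    bob = {"z": -z, "x": -x}
    return alice, bob


def fraction_opposite(n_pairs, alice_axis, bob_axis, seed=0):
    """Fraction of pairs in which Alice and Bob obtain opposite results."""
    rng = random.Random(seed)
    opposite = 0
    for _ in range(n_pairs):
        alice, bob = emit_pair(rng)
        # In this model a measurement just reveals the hidden, pre-existing value.
        if alice[alice_axis] != bob[bob_axis]:
            opposite += 1
    return opposite / n_pairs


aligned = fraction_opposite(10_000, "z", "z")
crossed = fraction_opposite(10_000, "z", "x")
print(f"aligned axes: {aligned:.3f} opposite; crossed axes: {crossed:.3f} opposite")
```

When the axes are aligned, the results are opposite every time. When the axes are crossed, the results are opposite for roughly half of the pairs. This matches the quantum predictions for the z and x axes alone, which is exactly why only a wider set of axes can tell the two apart.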

Assuming we restrict our measurements to the z and x axes, such a hidden variable theory is experimentally indistinguishable from quantum mechanics. In reality, of course, there is an (uncountably) infinite number of axes along which Alice and Bob can perform their measurements, so there has to be an infinite number of independent hidden variables. However, this is not a serious problem; we have formulated a very simplistic hidden variable theory, and a more sophisticated theory might be able to patch it up. It turns out that there is a much more serious challenge to the idea of hidden variables.

Bell's inequality

In 1964, John Bell showed that the predictions of quantum mechanics in the EPR thought experiment are significantly different from the predictions of a very broad class of hidden variable theories (the local hidden variable theories). Roughly speaking, quantum mechanics predicts much stronger statistical correlations between the measurement results performed on different axes than the hidden variable theories. These differences, expressed using inequality relations known as "Bell's inequalities", are in principle experimentally detectable. Later work by Eberhard showed that the key properties of local hidden variable theories that lead to Bell's inequalities are locality and counter-factual definiteness. Any theory in which these principles hold produces the inequalities. A. Fine subsequently showed that any theory satisfying the inequalities can be modeled by a local hidden variable theory.
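The gap between the two classes of prediction can be made concrete with the Clauser-Horne-Shimony-Holt (CHSH) form of Bell's inequality. CHSH is a later variant that the text does not itself discuss. The following is a minimal sketch under two assumptions. First, all measurement axes lie in one plane, so that the singlet correlation is E(a, b) = -cos(a - b). Second, the four measurement angles are the standard choice that maximizes the quantum value. Any local hidden variable theory is a statistical mixture of deterministic strategies in which each side holds a fixed ±1 answer for each of its two settings. It therefore suffices to enumerate those deterministic strategies:

```python
import itertools
import math


def quantum_correlation(a, b):
    """Singlet-state prediction E(a, b) = -cos(a - b) for spin axes in a common plane."""
    return -math.cos(a - b)


def chsh(E, a, a2, b, b2):
    """CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return E(a, b) - E(a, b2) + E(a2, b) + E(a2, b2)


# Local deterministic strategies: Alice answers A1, A2 and Bob answers B1, B2 (each +/-1).
local_max = max(
    abs(A1 * B1 - A1 * B2 + A2 * B1 + A2 * B2)
    for A1, A2, B1, B2 in itertools.product((+1, -1), repeat=4)
)

# Measurement angles (radians) that maximize the quantum value.
a, a2, b, b2 = 0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4
quantum_value = abs(chsh(quantum_correlation, a, a2, b, b2))
print(f"local bound: {local_max}, quantum: {quantum_value:.4f}")
```

Here the local strategies never exceed |S| = 2, whereas quantum mechanics reaches 2√2 ≈ 2.83. This is the kind of difference that Bell test experiments look for.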

After the publication of Bell's paper, a variety of experiments were devised to test Bell's inequalities. (As mentioned above, these experiments generally rely on photon polarization measurements.) All the experiments conducted to date have found behavior in line with the predictions of standard quantum mechanics.

However, Bell's theorem does not apply to all possible philosophically realist theories, although it is a common misconception, often repeated in popular accounts, that quantum mechanics is inconsistent with all notions of philosophical realism. Realist interpretations of quantum mechanics are possible, although, as discussed above, such interpretations must reject either locality or counter-factual definiteness. Mainstream physics prefers to keep locality while still maintaining a notion of realism that nevertheless rejects counter-factual definiteness. Examples of such mainstream realist interpretations are the consistent histories interpretation and the transactional interpretation. Fine's work showed that, taking locality as a given, there exist scenarios in which two statistical variables are correlated in a manner inconsistent with counter-factual definiteness, and that such scenarios are no more mysterious than any other, even though the inconsistency with counter-factual definiteness may seem 'counter-intuitive'. Violation of locality, however, is difficult to reconcile with special relativity and is thought to be incompatible with the principle of causality. On the other hand, the Bohm interpretation of quantum mechanics keeps counter-factual definiteness while introducing a conjectured non-local mechanism called the 'quantum potential'. Some workers in the field have also attempted to formulate hidden variable theories that exploit loopholes in actual experiments, such as the assumptions made in interpreting experimental data, although no such theory has been produced that can reproduce all the results of quantum mechanics.

There are also individual EPR-like experiments that have no local hidden variables explanation. Examples have been suggested by David Bohm and by Lucien Hardy.

"Acceptable theories", and the experiment

According to the present view of the situation, quantum mechanics simply contradicts Einstein's philosophical postulate that any acceptable physical theory should fulfill "local realism".

In the EPR paper (1935) the authors realized that quantum mechanics was non-acceptable in the sense of their above-mentioned assumptions, and Einstein thought erroneously that it could simply be augmented by 'hidden variables', without any further change, to get an acceptable theory. He pursued these ideas until the end of his life (1955), i.e. over twenty years.

In contrast, John Bell, in his 1964 paper, showed "once and for all" that quantum mechanics and Einstein's assumptions lead to different results, different by a factor of , for certain correlations. So the issue of "acceptability", up to this time mainly concerning theory (even philosophy), finally became experimentally decidable.

Many Bell test experiments have been carried out to date, e.g. those of Alain Aspect and others. They all show that pure quantum mechanics, and not Einstein's "local realism", is acceptable. Thus, in Karl Popper's sense, these experiments falsify Einstein's philosophical assumptions, especially the ideas on "hidden variables", whereas quantum mechanics itself remains a good candidate for a theory that is acceptable in a wider context.

However, an experiment that would also classify Bohm's non-local quasi-classical theory as unacceptable is apparently still lacking.

Implications for quantum mechanics

Most physicists today believe that quantum mechanics is correct, and that the EPR paradox is a "paradox" only because classical intuitions do not correspond to physical reality. How EPR is interpreted regarding locality depends on the interpretation of quantum mechanics one uses. In the Copenhagen interpretation, it is usually understood that instantaneous wavefunction collapse does occur. However, the view that there is no causal instantaneous effect has also been proposed within the Copenhagen interpretation: in this alternate view, measurement affects our ability to define (and measure) quantities in the physical system, not the system itself. In the many-worlds interpretation, a kind of locality is preserved, since the effects of irreversible operations such as measurement arise from the relativization of a global state to a subsystem such as that of an observer.

The EPR paradox has deepened our understanding of quantum mechanics by exposing the fundamentally non-classical characteristics of the measurement process. Prior to the publication of the EPR paper, a measurement was often visualized as a physical disturbance inflicted directly upon the measured system. For instance, when measuring the position of an electron, one imagines shining a light on it, thus disturbing the electron and producing the quantum mechanical uncertainties in its position. Such explanations, which are still encountered in popular expositions of quantum mechanics, are debunked by the EPR paradox, which shows that a "measurement" can be performed on a particle without disturbing it directly, by performing a measurement on a distant entangled particle.

Technologies relying on quantum entanglement are now being developed. In quantum cryptography, entangled particles are used to transmit signals that cannot be eavesdropped upon without leaving a trace. In quantum computation, entangled quantum states are used to perform computations in parallel, which may allow certain calculations to be performed much more quickly than they ever could be with classical computers.

Mathematical formulation

The above discussion can be expressed mathematically using the quantum mechanical formulation of spin. The spin degree of freedom for an electron is associated with a two-dimensional Hilbert space H, with each quantum state corresponding to a vector in that space. The operators corresponding to the spin along the x, y, and z direction, denoted Sx, Sy, and Sz respectively, can be represented using the Pauli matrices:

$$S_x = \frac{\hbar}{2}\begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}, \quad S_y = \frac{\hbar}{2}\begin{pmatrix} 0 & -i \\ i & 0 \end{pmatrix}, \quad S_z = \frac{\hbar}{2}\begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}$$

where $\hbar$ stands for Planck's constant divided by 2π.

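The eigenstates of Sz are represented as

$$|{+z}\rangle \leftrightarrow \begin{pmatrix} 1 \\ 0 \end{pmatrix}, \quad |{-z}\rangle \leftrightarrow \begin{pmatrix} 0 \\ 1 \end{pmatrix}$$

- In qubit notation: $|{+z}\rangle = |0\rangle$, $|{-z}\rangle = |1\rangle$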

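and the eigenstates of Sx are represented as

$$|{+x}\rangle \leftrightarrow \frac{1}{\sqrt{2}}\begin{pmatrix} 1 \\ 1 \end{pmatrix}, \quad |{-x}\rangle \leftrightarrow \frac{1}{\sqrt{2}}\begin{pmatrix} 1 \\ -1 \end{pmatrix}$$

- In qubit notation: $|{+x}\rangle = |{+}\rangle = \tfrac{1}{\sqrt{2}}\big(|0\rangle + |1\rangle\big)$, $|{-x}\rangle = |{-}\rangle = \tfrac{1}{\sqrt{2}}\big(|0\rangle - |1\rangle\big)$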

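The Hilbert space of the electron pair is $H \otimes H$, the tensor product of the two electrons' Hilbert spaces. The spin singlet state is

$$|\psi\rangle = \frac{1}{\sqrt{2}}\Big(|{+z}\rangle \otimes |{-z}\rangle - |{-z}\rangle \otimes |{+z}\rangle\Big)$$

- In qubit notation: $|\psi\rangle = \tfrac{1}{\sqrt{2}}\big(|0\rangle \otimes |1\rangle - |1\rangle \otimes |0\rangle\big)$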

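where the two terms on the right hand side are what we have referred to as state I and state II above. This is also commonly written as

$$|\psi\rangle = \frac{1}{\sqrt{2}}\Big(|{+z}\rangle|{-z}\rangle - |{-z}\rangle|{+z}\rangle\Big)$$

- In qubit notation: $|\psi\rangle = \tfrac{1}{\sqrt{2}}\big(|01\rangle - |10\rangle\big)$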

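From the above equations, it can be shown that the spin singlet can also be written as

$$|\psi\rangle = -\frac{1}{\sqrt{2}}\Big(|{+x}\rangle|{-x}\rangle - |{-x}\rangle|{+x}\rangle\Big)$$

- In qubit notation: $|\psi\rangle = -\tfrac{1}{\sqrt{2}}\big(|{+}{-}\rangle - |{-}{+}\rangle\big)$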

where the terms on the right hand side are what we have referred to as state Ia and state IIa.
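The basis change can be checked numerically. The following is a minimal sketch that writes |±x⟩ as (1, ±1)/√2 in the Sz basis and orders two-electron amplitudes as (+z+z, +z-z, -z+z, -z-z). The helper names `kron` and `combine` are illustrative. The sketch confirms that (1/√2)(|+z⟩|-z⟩ - |-z⟩|+z⟩) equals -(1/√2)(|+x⟩|-x⟩ - |-x⟩|+x⟩):

```python
import math

R = 1 / math.sqrt(2)
PLUS_Z, MINUS_Z = (1.0, 0.0), (0.0, 1.0)
PLUS_X, MINUS_X = (R, R), (R, -R)


def kron(u, v):
    """Tensor product of two 2-component vectors, ordered (+z+z, +z-z, -z+z, -z-z)."""
    return tuple(ui * vj for ui in u for vj in v)


def combine(c1, v1, c2, v2):
    """Linear combination c1*v1 + c2*v2 of 4-component vectors."""
    return tuple(c1 * p + c2 * q for p, q in zip(v1, v2))


# Singlet written in the z basis (states I and II) and in the x basis (states Ia and IIa).
singlet_z = combine(R, kron(PLUS_Z, MINUS_Z), -R, kron(MINUS_Z, PLUS_Z))
singlet_x = combine(-R, kron(PLUS_X, MINUS_X), R, kron(MINUS_X, PLUS_X))

same = all(math.isclose(p, q, abs_tol=1e-12) for p, q in zip(singlet_z, singlet_x))
print(singlet_z)
print("x-basis form equals z-basis form:", same)
```

The overall minus sign in the x-basis form is a global phase and has no physical effect. It is needed only for the two expressions to be equal as vectors.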

To illustrate how this leads to the violation of local realism, we need to show that after Alice's measurement of Sz (or Sx), Bob's value of Sz (or Sx) is uniquely determined, and therefore corresponds to an "element of physical reality". This follows from the principles of measurement in quantum mechanics. When Sz is measured, the system state ψ collapses into an eigenvector of Sz. If the measurement result is +z, this means that immediately after measurement the system state undergoes an orthogonal projection of ψ onto the space of states of the form

$$|{+z}\rangle \otimes |\phi\rangle, \quad |\phi\rangle \in H$$

- In qubit notation: $|0\rangle \otimes |\phi\rangle$

For the spin singlet, the new state is

$$|{+z}\rangle \otimes |{-z}\rangle$$

- In qubit notation: $|0\rangle \otimes |1\rangle = |01\rangle$

Similarly, if Alice's measurement result is -z, the system undergoes an orthogonal projection onto

$$|{-z}\rangle \otimes |\phi\rangle, \quad |\phi\rangle \in H$$

- In qubit notation: $|1\rangle \otimes |\phi\rangle$

which means that the new state is

$$|{-z}\rangle \otimes |{+z}\rangle$$

- In qubit notation: $|1\rangle \otimes |0\rangle = |10\rangle$

This implies that the measurement for Sz for Bob's electron is now determined. It will be -z in the first case or +z in the second case.
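The projection step can be sketched in a few lines. The sketch assumes real amplitudes ordered (+z+z, +z-z, -z+z, -z-z), with Alice's electron as the first factor. The function names are hypothetical. The code projects the singlet onto |±z⟩ ⊗ H for Alice's result, renormalizes, and then reads off the probability that Bob finds +z:

```python
import math

R = 1 / math.sqrt(2)
# Spin singlet in the basis (+z+z, +z-z, -z+z, -z-z); Alice's electron is the first factor.
SINGLET = (0.0, R, -R, 0.0)


def measure_alice_z(state, alice_result):
    """Project onto |alice_result> (x) H and renormalize.

    alice_result is +1 for +z or -1 for -z. Returns (probability, post-measurement state).
    Assumes the outcome has nonzero probability, as both outcomes do for the singlet.
    """
    alice_index = 0 if alice_result == +1 else 1
    projected = tuple(a if i // 2 == alice_index else 0.0 for i, a in enumerate(state))
    probability = sum(a * a for a in projected)
    norm = math.sqrt(probability)
    return probability, tuple(a / norm for a in projected)


def bob_prob_plus_z(state):
    """Probability that Bob's electron (second factor) is found in +z."""
    return state[0] ** 2 + state[2] ** 2


for result in (+1, -1):
    p, post = measure_alice_z(SINGLET, result)
    label = "+z" if result == +1 else "-z"
    print(f"Alice gets {label} with p = {p:.2f}; then P(Bob gets +z) = {bob_prob_plus_z(post):.2f}")
```

Each of Alice's outcomes occurs with probability 0.50. Afterwards, Bob's probability of +z is either 0 or 1, so his Sz value is fixed.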

It remains only to show that Sx and Sz cannot simultaneously possess definite values in quantum mechanics. One may show in a straightforward manner that no possible vector can be an eigenvector of both matrices. More generally, one may use the fact that the operators do not commute,

$$[S_x, S_z] = -i\hbar S_y \neq 0,$$

along with the Heisenberg uncertainty relation

$$\langle (\Delta S_x)^2 \rangle \langle (\Delta S_z)^2 \rangle \geq \frac{1}{4}\left|\langle [S_x, S_z] \rangle\right|^2.$$
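Both facts can be verified with plain 2×2 arithmetic. The following is a minimal sketch that works in units where ħ = 1 (an assumption made only for the numerics). The helper names are illustrative. The sketch checks the commutator [Sx, Sz] = -iħSy. It then confirms that neither eigenvector of Sz is an eigenvector of Sx. Because Sz has two distinct eigenvalues, those two vectors are its only eigenvectors up to scale:

```python
HBAR = 1.0  # work in units where hbar = 1

# Spin operators built from the Pauli matrices, as nested lists of (complex) numbers.
SX = [[0, HBAR / 2], [HBAR / 2, 0]]
SY = [[0, -1j * HBAR / 2], [1j * HBAR / 2, 0]]
SZ = [[HBAR / 2, 0], [0, -HBAR / 2]]


def matmul(A, B):
    """Product of two 2x2 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def commutator(A, B):
    """[A, B] = AB - BA for 2x2 matrices."""
    AB, BA = matmul(A, B), matmul(B, A)
    return [[AB[i][j] - BA[i][j] for j in range(2)] for i in range(2)]


def apply(A, v):
    """Matrix-vector product for a 2x2 matrix and a 2-vector."""
    return [sum(A[i][k] * v[k] for k in range(2)) for i in range(2)]


lhs = commutator(SX, SZ)
rhs = [[-1j * HBAR * SY[i][j] for j in range(2)] for i in range(2)]
commutator_matches = all(abs(lhs[i][j] - rhs[i][j]) < 1e-12 for i in range(2) for j in range(2))
print("[Sx, Sz] == -i*hbar*Sy:", commutator_matches)

# v is an eigenvector of Sx only if Sx v is parallel to v, i.e. the 2x2 determinant vanishes.
for v in ([1, 0], [0, 1]):  # the eigenvectors of Sz, up to scale
    w = apply(SX, v)
    shared = abs(v[0] * w[1] - v[1] * w[0]) < 1e-12
    print(v, "is also an eigenvector of Sx:", shared)
```

The commutator check prints True, and both eigenvector checks print False. No state therefore assigns definite values to both Sx and Sz.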

See also

References

Selected papers

- A. Aspect, Bell's inequality test: more ideal than ever, Nature 398 189 (1999). [5]

- J.S. Bell, On the Einstein-Podolsky-Rosen paradox, Physics 1 195 (1964).

- P.H. Eberhard, Bell's theorem without hidden variables. Nuovo Cimento 38B1 75 (1977).

- P.H. Eberhard, Bell's theorem and the different concepts of locality. Nuovo Cimento 46B 392 (1978).

- A. Einstein, B. Podolsky, and N. Rosen, Can quantum-mechanical description of physical reality be considered complete? Phys. Rev. 47 777 (1935). [6]

- A. Fine, Hidden Variables, Joint Probability, and the Bell Inequalities. Phys. Rev. Lett. 48, 291 (1982).[7]

- A. Fine, Do Correlations need to be explained?, in Philosophical Consequences of Quantum Theory: Reflections on Bell's Theorem, edited by Cushing & McMullin (University of Notre Dame Press, 1986).

- L. Hardy, Nonlocality for two particles without inequalities for almost all entangled states. Phys. Rev. Lett. 71 1665 (1993).[8]

- M. Mizuki, A classical interpretation of Bell's inequality. Annales de la Fondation Louis de Broglie 26 683 (2001).

- P. Pluch, "Theory for Quantum Probability", PhD Thesis University of Klagenfurt (2006)

- M. A. Rowe, D. Kielpinski, V. Meyer, C. A. Sackett, W. M. Itano, C. Monroe and D. J. Wineland, Experimental violation of a Bell's inequality with efficient detection, Nature 409, 791-794 (15 February 2001). [9]

- M. Smerlak, C. Rovelli, Relational EPR [10]

Notes

- The God Particle: If the Universe is the Answer, What is the Question - pages 187 to 189, and 21 by Leon Lederman with Dick Teresi (copyright 1993) Houghton Mifflin Company

- The Einstein-Podolsky-Rosen Argument in Quantum Theory; 1.2 The argument in the text; http://plato.stanford.edu/entries/qt-epr/#1.2

- Quoted in Kaiser, David. "Bringing the human actors back on stage: the personal context of the Einstein-Bohr debate," British Journal for the History of Science 27 (1994): 129-152, on page 147.

Books

- J.S. Bell, Speakable and Unspeakable in Quantum Mechanics (Cambridge University Press, 1987). ISBN 0-521-36869-3

- J.J. Sakurai, Modern Quantum Mechanics (Addison-Wesley, 1994), pp. 174–187, 223-232. ISBN 0-201-53929-2

- F. Selleri, Quantum Mechanics Versus Local Realism: The Einstein-Podolsky-Rosen Paradox (Plenum Press, New York, 1988). ISBN 0-306-42739-7

- Roger Penrose, The Road to Reality (Alfred A. Knopf, 2005; Vintage Books, 2006). ISBN 0-679-45443-8

External links

- The original EPR paper

- A. Fine, The Einstein-Podolsky-Rosen Argument in Quantum Theory

- Abner Shimony, Bell’s Theorem (2004)

- EPR, Bell & Aspect: The Original References

- Does Bell's Inequality Principle rule out local theories of quantum mechanics? From the Usenet Physics FAQ.

- Theoretical use of EPR in teleportation

- Effective use of EPR in cryptography


Quantum entanglement is a possible property of a quantum mechanical state of a system of two or more objects in which the quantum states of the constituting objects are linked together so that one object can no longer be adequately described without full mention of its counterpart, even though the individual objects may be spatially separated. This interconnection leads to non-classical correlations between observable physical properties of remote systems, often referred to as nonlocal correlations.

For example, quantum mechanics holds that observables such as spin are indeterminate until some physical intervention is made to measure the spin of the object in question. In the singlet state of two spins, a measurement on either particle alone is equally likely to find it spin-up as spin-down, and measuring many such particles produces an unpredictable series of results whose proportions tend ever more closely to half up and half down. The joint results, however, are quite different: when both members of an entangled pair are measured along the same axis, one will always be spin-up and the other spin-down.[citation needed] The distance between the two particles is irrelevant.
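The statistics described above can be sketched with a small Monte Carlo sampler. This is a minimal illustration, not a physical model: it assumes the textbook singlet predictions (each single-particle result is 50/50, and the two results are opposite with probability cos²(θ/2) when the measurement axes differ by an angle θ), and the names `sample_singlet` and `run` are hypothetical. Because the sampler generates Bob's result from Alice's, it reproduces the correlations without being a local model.

```python
import math
import random


def sample_singlet(theta, rng):
    """Draw one pair of outcomes (+1 = spin-up, -1 = spin-down) for a spin singlet.

    theta is the angle between Alice's and Bob's measurement axes. Alice's
    result is a fair coin flip; Bob's result is opposite to hers with
    probability cos^2(theta / 2), the quantum prediction for the singlet.
    """
    alice = rng.choice((+1, -1))
    p_opposite = math.cos(theta / 2) ** 2
    bob = -alice if rng.random() < p_opposite else alice
    return alice, bob


def run(theta, n=100_000, seed=1):
    """Return (fraction of Alice's results that are up, fraction of opposite pairs)."""
    rng = random.Random(seed)
    pairs = [sample_singlet(theta, rng) for _ in range(n)]
    frac_alice_up = sum(a == +1 for a, _ in pairs) / n
    frac_opposite = sum(a == -b for a, b in pairs) / n
    return frac_alice_up, frac_opposite


# Same axis (theta = 0): single results look random, but pairs are always opposite.
up, opposite = run(theta=0.0)
print(f"Alice spin-up fraction: {up:.3f}")
print(f"pairs with opposite spins: {opposite:.3f}")  # 1.000
```

With perpendicular axes (θ = π/2) the same sampler gives opposite results only about half the time, so the perfect anticorrelation is specific to measuring along the same axis.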

Theories involving "hidden variables" have been proposed in order to explain this result; these hidden variables account for the spin of each particle, and are determined when the entangled pair is created. It may then appear that the hidden variables must remain in communication no matter how far apart the particles are, so that the hidden variable describing one particle can change instantly when the other is measured. If the hidden variables stop interacting when the particles are far apart, the statistics of many measurements must obey an inequality (called Bell's inequality), which is, however, violated both by quantum-mechanical predictions and in experiments.[citation needed]
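The violation can be illustrated numerically with the CHSH form of Bell's inequality, a widely used variant swapped in here for concreteness. The sketch assumes the singlet correlation E(a, b) = −cos(a − b) for coplanar measurement angles and the standard angle choice 0, π/2 for Alice and π/4, 3π/4 for Bob. A local hidden-variable model is represented by deterministic ±1 answers fixed in advance for each setting; any such model is a probabilistic mixture of these strategies, so it cannot exceed their maximum.

```python
import itertools
import math


def quantum_correlation(a, b):
    """Singlet-state correlation E(a, b) = -cos(a - b) for coplanar axes."""
    return -math.cos(a - b)


def chsh(E, a, a2, b, b2):
    """CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return E(a, b) - E(a, b2) + E(a2, b) + E(a2, b2)


# Angle choice that maximises the quantum value.
a, a2, b, b2 = 0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4
s_quantum = abs(chsh(quantum_correlation, a, a2, b, b2))

# Local deterministic strategies: each side pre-assigns +/-1 to each of its two
# settings (the "hidden variable"); the correlation of two fixed answers is their product.
best_local = 0
for A0, A1, B0, B1 in itertools.product((+1, -1), repeat=4):
    s = A0 * B0 - A0 * B1 + A1 * B0 + A1 * B1
    best_local = max(best_local, abs(s))

print(f"quantum |S| = {s_quantum:.4f}")  # 2.8284, i.e. 2*sqrt(2)
print(f"best local |S| = {best_local}")  # 2
```

The local value is capped at 2 because S = A0(B0 − B1) + A1(B0 + B1) and one of the two brackets is always zero.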

When pairs of particles are generated by the decay of other particles, naturally or through induced collision, these pairs may be termed "entangled", in that such pairs often necessarily have linked and opposite properties, such as spin or charge. The assumption that measurement in effect "creates" the state of the measured property goes back to the arguments of Schrödinger and of Einstein, Podolsky, and Rosen, among others[citation needed] (see EPR paradox), concerning Heisenberg's uncertainty principle and its relation to observation (see also the Copenhagen interpretation). The analysis of entangled particles by means of Bell's theorem can lead to an impression of non-locality (that is, that there exists a connection between the members of such a pair that defies both classical and relativistic concepts of space and time). This impression is reasonable if it is assumed that each particle departs the scene of the pair's creation in an ambiguous state (as in one possible interpretation of Heisenberg). In that case, either dichotomous outcome of a given measurement remains a possibility; only measurement itself would precipitate a distinct value. On the other hand, if each particle departs the scene of its "entangled creation" with properties that would unambiguously determine the value of the property to be subsequently measured, then a postulated instantaneous transmission of information across space and time would not be required to account for the result. The Bohm interpretation postulates that a guide wave exists connecting what are perceived as individual particles, such that the supposed hidden variables are actually the particles themselves existing as functions of that wave.

Observation of wavefunction collapse can lead to the impression that measurements performed on one system instantaneously influence other systems entangled with the measured system, even when far apart. Entanglement alone, however, does not allow classical information to be transmitted faster than the speed of light: protocols that use entanglement to convey information, such as quantum teleportation, also require a classical information channel to complete the process.[citation needed]

Background

Entanglement is one of the properties of quantum mechanics that caused Einstein and others to dislike the theory. In 1935, Einstein, Podolsky, and Rosen formulated the EPR paradox, a quantum-mechanical thought experiment with a highly counterintuitive and apparently nonlocal outcome, in response to Niels Bohr's advocacy of the belief that quantum mechanics as a theory was complete.[1] Einstein famously derided entanglement as "spukhafte Fernwirkung"[citation needed], or "spooky action at a distance". It was his belief that future mathematicians would discover that quantum entanglement entailed nothing more or less than an error in their calculations. As he once wrote: "I find the idea quite intolerable that an electron exposed to radiation should choose of its own free will, not only its moment to jump off, but also its direction. In that case, I would rather be a cobbler, or even an employee in a gaming house, than a physicist".[2]

On the other hand, quantum mechanics has been highly successful in producing correct experimental predictions, and the strong correlations predicted by the theory of quantum entanglement have now in fact been observed.[citation needed] One apparent way to explain the observed correlations in line with the predictions of quantum entanglement is an approach known as "local hidden variable theory", in which unknown, shared, local parameters would cause the correlations. However, in 1964 John Stewart Bell derived an upper limit, known as Bell's inequality, on the strength of correlations for any theory obeying "local realism". Quantum entanglement can lead to stronger correlations that violate this limit, so that quantum entanglement is experimentally distinguishable from a broad class of local hidden-variable theories.[citation needed] Results of subsequent experiments have overwhelmingly supported quantum mechanics. However, there may be experimental problems, known as "loopholes", that affect the validity of these experimental findings. High-efficiency and high-visibility experiments are now in progress that should confirm or invalidate the existence of those loopholes. For more information, see the article on experimental tests of Bell's inequality.

Observations pertaining to entangled states appear to conflict with the property of relativity that information cannot be transferred faster than the speed of light. Although two entangled systems appear to interact across large spatial separations, the current state of belief is that no useful information can be transmitted in this way, meaning that causality cannot be violated through entanglement. This is the statement of the no-communication theorem.

Even if information cannot be transmitted through entanglement alone, it is believed[who?] that it is possible to transmit information using a set of entangled states in conjunction with a classical information channel. This process is known as quantum teleportation. Despite its name, quantum teleportation does not permit information to be transmitted faster than light, because a classical information channel is required to complete the process.

In addition, experiments are underway to see if entanglement is the result of retrocausality.[3][4]

Pure states


The following discussion builds on the theoretical framework developed in the articles bra-ket notation and mathematical formulation of quantum mechanics.[citation needed]

Consider two noninteracting systems $A$ and $B$, with respective Hilbert spaces $H_A$ and $H_B$. The Hilbert space of the composite system is the tensor product

$$H_A \otimes H_B.$$

If the first system is in state $|\psi\rangle_A$ and the second in state $|\phi\rangle_B$, the state of the composite system is

$$|\psi\rangle_A \otimes |\phi\rangle_B.$$

States of the composite system which can be represented in this form are called separable states, or product states.

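Not all states are product states. Fix a basis $\{|i\rangle_A\}$ for $H_A$ and a basis $\{|j\rangle_B\}$ for $H_B$. The most general state in $H_A \otimes H_B$ is of the form

$$|\psi\rangle_{AB} = \sum_{i,j} c_{ij}\, |i\rangle_A \otimes |j\rangle_B.$$

This state is separable if there exist coefficients $c^A_i$ and $c^B_j$ such that $c_{ij} = c^A_i c^B_j$, yielding $|\psi\rangle_A = \sum_i c^A_i |i\rangle_A$ and $|\phi\rangle_B = \sum_j c^B_j |j\rangle_B$. It is inseparable if no such coefficients exist. If a state is inseparable, it is called an entangled state.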
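For two qubits (two basis states on each side), the separability condition can be checked directly: the coefficients $c_{ij}$ factor as $c^A_i c^B_j$ exactly when the $2 \times 2$ matrix $[c_{ij}]$ has rank at most 1, i.e. when its determinant vanishes. This is a minimal sketch; the function name and tolerance are illustrative, and the rank test is a standard linear-algebra fact rather than something stated in the text.

```python
import math


def is_product_state(c, tol=1e-12):
    """Return True if the two-qubit state sum_ij c[i][j] |i>_A |j>_B is separable.

    c is a 2x2 nested list of (possibly complex) amplitudes. The state factorises
    as (sum_i a_i |i>_A)(sum_j b_j |j>_B) exactly when c has rank <= 1, which for
    a 2x2 matrix means det(c) = 0.
    """
    det = c[0][0] * c[1][1] - c[0][1] * c[1][0]
    return abs(det) < tol


s = 1 / math.sqrt(2)
product = [[s, s], [0.0, 0.0]]      # |0>_A (|0>_B + |1>_B) / sqrt(2)
entangled = [[0.0, s], [-s, 0.0]]   # (|0>_A|1>_B - |1>_A|0>_B) / sqrt(2)

print(is_product_state(product))    # True
print(is_product_state(entangled))  # False
```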

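For example, given two basis vectors $\{|0\rangle_A, |1\rangle_A\}$ of $H_A$ and two basis vectors $\{|0\rangle_B, |1\rangle_B\}$ of $H_B$, the following is an entangled state:

$$\tfrac{1}{\sqrt{2}}\left(|0\rangle_A \otimes |1\rangle_B - |1\rangle_A \otimes |0\rangle_B\right).$$

If the composite system is in this state, it is impossible to attribute to either system $A$ or system $B$ a definite pure state. Instead, their states are superposed with one another. In this sense, the systems are "entangled".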

Now suppose Alice is an observer for system $A$, and Bob is an observer for system $B$. If Alice makes a measurement in the eigenbasis $\{|0\rangle_A, |1\rangle_A\}$ of $A$, there are two possible outcomes, occurring with equal probability:[citation needed]

- Alice measures 0, and the state of the system collapses to $|0\rangle_A |1\rangle_B$.

- Alice measures 1, and the state of the system collapses to $|1\rangle_A |0\rangle_B$.

If the former occurs, then any subsequent measurement performed by Bob, in the same basis, will always return 1. If the latter occurs (Alice measures 1), then Bob's measurement will return 0 with certainty. Thus, system $B$ has been altered by Alice performing a local measurement on system $A$. This remains true even if the systems $A$ and $B$ are spatially separated. This is the foundation of the EPR paradox.

The outcome of Alice's measurement is random. Alice cannot decide which state to collapse the composite system into, and therefore cannot transmit information to Bob by acting on her system. Causality is thus preserved, in this particular scheme. For the general argument, see no-communication theorem.
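The no-signalling point can be checked on this example: whatever basis Alice chooses, Bob's outcome statistics, averaged over Alice's result (which Bob does not know), stay the same. The sketch assumes the entangled state is (|0⟩_A|1⟩_B − |1⟩_A|0⟩_B)/√2, which matches the text's description of Bob's results, and compares Alice measuring in the {|0⟩, |1⟩} basis with measuring in the (|0⟩ ± |1⟩)/√2 basis; the function names are hypothetical.

```python
import math

S = 1 / math.sqrt(2)
# PSI[a][b] is the amplitude of |a>_A |b>_B; here (|0>_A|1>_B - |1>_A|0>_B) / sqrt(2).
PSI = [[0.0, S], [-S, 0.0]]

Z_BASIS = ([1.0, 0.0], [0.0, 1.0])  # Alice's outcomes 0 and 1
X_BASIS = ([S, S], [S, -S])         # Alice's outcomes + and -


def alice_measures(psi, basis):
    """Return a list of (probability, Bob's normalised state) for each Alice outcome."""
    results = []
    for u in basis:
        # Project Alice's qubit onto u; what remains is Bob's unnormalised state.
        phi = [sum(u[a].conjugate() * psi[a][b] for a in range(2)) for b in range(2)]
        p = sum(abs(x) ** 2 for x in phi)
        if p > 0:
            results.append((p, [x / math.sqrt(p) for x in phi]))
    return results


def bob_prob_zero(psi, alice_basis):
    """Bob's probability of reading 0, averaged over Alice's outcomes."""
    return sum(p * abs(phi[0]) ** 2 for p, phi in alice_measures(psi, alice_basis))


for name, basis in (("Z", Z_BASIS), ("X", X_BASIS)):
    print(name, round(bob_prob_zero(PSI, basis), 6))  # 0.5 in both cases
```

Conditioned on Alice's Z-basis result, Bob's state is |1⟩ or |0⟩ with certainty, as in the text; only the average, which is all Bob can see without a classical message, is basis-independent.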

In more formal mathematical settings, the correct setting for pure states in quantum mechanics is taken to be projective Hilbert space endowed with the Fubini-Study metric. The product of two pure states is then given by the Segre embedding.

Ensembles

As mentioned above, a state of a quantum system is given by a unit vector in a Hilbert space. More generally, if one has a large number of copies of the same system, then the state of this ensemble is described by a density matrix: a positive semidefinite matrix (a positive trace-class operator when the state space is infinite-dimensional) with trace 1. By the spectral theorem, such a matrix takes the general form

$$\rho = \sum_i w_i\, |\alpha_i\rangle\langle\alpha_i|,$$

where the $|\alpha_i\rangle$ are orthonormal, the nonnegative weights $w_i$ sum to 1 and, in the infinite-dimensional case, we take the closure of such states in the trace norm. We can interpret $\rho$ as representing an ensemble in which $w_i$ is the proportion of the ensemble whose states are $|\alpha_i\rangle$. When a mixed state has rank 1, it therefore describes a pure ensemble. When there is less than total information about the state of a quantum system, we need density matrices to represent the state.
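The ensemble form of ρ can be made concrete for a single spin-1/2 system. This sketch builds ρ = Σ w_i |α_i⟩⟨α_i| from a list of weights and state vectors and uses the purity Tr(ρ²), a standard test swapped in here for computing the rank: Tr(ρ²) = 1 exactly when ρ has rank 1 (a pure ensemble) and is smaller otherwise. Helper names are hypothetical.

```python
import math


def outer(v):
    """|v><v| as a nested list."""
    return [[vi * vj.conjugate() for vj in v] for vi in v]


def density_matrix(ensemble):
    """rho = sum_i w_i |alpha_i><alpha_i| for a list of (w_i, alpha_i) pairs."""
    n = len(ensemble[0][1])
    rho = [[0j] * n for _ in range(n)]
    for w, alpha in ensemble:
        proj = outer(alpha)
        for r in range(n):
            for c in range(n):
                rho[r][c] += w * proj[r][c]
    return rho


def trace(m):
    """Sum of the diagonal entries (real for a density matrix)."""
    return sum(m[i][i] for i in range(len(m))).real


def purity(rho):
    """Tr(rho^2) = sum_{i,k} rho[i][k] * rho[k][i]."""
    n = len(rho)
    return sum(rho[i][k] * rho[k][i] for i in range(n) for k in range(n)).real


up, down = [1.0, 0.0], [0.0, 1.0]
plus = [1 / math.sqrt(2), 1 / math.sqrt(2)]

mixed = density_matrix([(0.5, up), (0.5, down)])  # half the copies up, half down
pure = density_matrix([(1.0, plus)])              # every copy in the same state

print(trace(mixed), purity(mixed))  # 1.0 0.5
print(trace(pure), purity(pure))    # both 1 up to round-off
```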

Following the definition in the previous section, for a bipartite composite system, mixed states are just density matrices on $H_A \otimes H_B$. Extending the definition of separability from the pure case, we say that a mixed state is separable if it can be written as

$$\rho = \sum_i p_i\, \rho_i^A \otimes \rho_i^B,$$

where the $p_i$ are nonnegative and sum to 1, and the $\rho_i^A$ and $\rho_i^B$ are themselves states on the subsystems $A$ and $B$ respectively. In other words, a state is separable if it is a probability distribution over uncorrelated states, or product states. We can assume without loss of generality that the $\rho_i^A$ and $\rho_i^B$ are pure ensembles. A state is then said to be entangled if it is not separable. In general, determining whether a mixed state is entangled is considered difficult; formally, the problem has been shown to be NP-hard. For the $2 \times 2$ and $2 \times 3$ cases, a necessary and sufficient criterion for separability is given by the famous Positive Partial Transpose (PPT) condition.
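For the 2 × 2 case (two qubits), the PPT condition can be tested in a few lines: transpose the indices belonging to subsystem B and check whether the result is still positive semidefinite; for two qubits, failure signals entanglement and success signals separability. This is a minimal sketch assuming the basis ordering |a⟩_A|b⟩_B ↦ index 2a + b. Positivity is checked through principal minors (a Hermitian matrix is positive semidefinite iff all of them are nonnegative), chosen to stay within the Python standard library rather than for efficiency; the function names are hypothetical.

```python
import itertools


def pure_density(psi):
    """|psi><psi| for a state vector given as a list of amplitudes."""
    return [[a * b.conjugate() for b in psi] for a in psi]


def partial_transpose_b(rho):
    """Transpose subsystem B of a two-qubit density matrix (index = 2*a + b)."""
    pt = [[0.0] * 4 for _ in range(4)]
    for a, b, a2, b2 in itertools.product(range(2), repeat=4):
        pt[2 * a + b][2 * a2 + b2] = rho[2 * a + b2][2 * a2 + b]
    return pt


def det(m):
    """Determinant by cofactor expansion along the first row (fine up to 4x4)."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det([row[:j] + row[j + 1:] for row in m[1:]])
               for j in range(len(m)))


def is_psd(m, tol=1e-12):
    """A Hermitian matrix is positive semidefinite iff every principal minor is >= 0."""
    n = len(m)
    for k in range(1, n + 1):
        for idx in itertools.combinations(range(n), k):
            minor = [[m[i][j] for j in idx] for i in idx]
            if det(minor).real < -tol:
                return False
    return True


def ppt_says_entangled(rho):
    """Peres-Horodecki test; for two qubits it is necessary and sufficient."""
    return not is_psd(partial_transpose_b(rho))


s = 2 ** -0.5
singlet_like = pure_density([0.0, s, -s, 0.0])  # (|01> - |10>) / sqrt(2)
product = pure_density([1.0, 0.0, 0.0, 0.0])    # |0>_A |0>_B
maximally_mixed = [[0.25 if i == j else 0.0 for j in range(4)] for i in range(4)]

for name, rho in (("singlet-like", singlet_like), ("product", product),
                  ("maximally mixed", maximally_mixed)):
    print(name, ppt_says_entangled(rho))  # True, False, False
```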

Experimentally, a mixed ensemble might be realized as follows. Consider a "black-box" apparatus that emits electrons towards an observer. The electrons' Hilbert spaces are identical. The apparatus might produce electrons that are all in the same state; in this case, the electrons received by the observer form a pure ensemble. However, the apparatus could produce electrons in different states. For example, it could produce two populations of electrons: one in a state with spins aligned along the positive direction of a chosen axis, and the other in a state with spins aligned along the negative direction of a (possibly different) chosen axis. In general, the result is a mixed ensemble, as there can be any number of populations, each corresponding to a different state.

Reduced density matrices

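Consider as above systems $A$ and $B$, each with a Hilbert space $H_A$, $H_B$. Let the state of the composite system be

$$|\Psi\rangle \in H_A \otimes H_B.$$

As indicated above, in general there is no way to associate a pure state to the component system $A$. However, it is still possible to associate a density matrix. Let

$$\rho_T = |\Psi\rangle\langle\Psi|,$$

which is the projection operator onto this state. The state of $A$ is the partial trace of $\rho_T$ over the basis of system $B$:

$$\rho_A \equiv \sum_j \langle j|_B \left(|\Psi\rangle\langle\Psi|\right) |j\rangle_B = \operatorname{Tr}_B\, \rho_T.$$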

$\rho_A$ is sometimes called the reduced density matrix of $\rho_T$ on subsystem $A$. Colloquially, we "trace out" system $B$ to obtain the reduced density matrix on $A$.

For example, the reduced density matrix of $A$ for the entangled state discussed above is

$$\rho_A = \tfrac{1}{2}\left(|0\rangle_A\langle 0|_A + |1\rangle_A\langle 1|_A\right).$$

This demonstrates that, as expected, the reduced density matrix for an entangled pure ensemble is a mixed ensemble. Also not surprisingly, the density matrix of $A$ for the pure product state $|\psi\rangle_A \otimes |\phi\rangle_B$ discussed above is

$$\rho_A = |\psi\rangle_A\langle\psi|_A.$$

In general, a bipartite pure state ρ is entangled if and only if its reduced states are mixed; for such a state, one reduced state is mixed exactly when the other is.
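The partial trace can be written out explicitly for two qubits. With the amplitudes of |Ψ⟩ stored as `psi[a][b]` (the coefficient of |a⟩_A|b⟩_B), tracing out B gives (ρ_A)_{aa'} = Σ_b psi[a][b]·conj(psi[a'][b]). The sketch assumes the entangled example is (|0⟩_A|1⟩_B − |1⟩_A|0⟩_B)/√2 and uses the purity Tr(ρ_A²) to tell a mixed reduced state from a pure one; the function names are hypothetical.

```python
import math


def reduced_density_a(psi):
    """rho_A = Tr_B |psi><psi| for a two-qubit pure state psi[a][b]."""
    return [[sum(psi[a][b] * psi[a2][b].conjugate() for b in range(2))
             for a2 in range(2)]
            for a in range(2)]


def purity(rho):
    """Tr(rho^2): 1 for a pure state, 1/2 for the maximally mixed qubit state."""
    return sum(rho[i][k] * rho[k][i] for i in range(2) for k in range(2)).real


s = 1 / math.sqrt(2)
entangled = [[0.0, s], [-s, 0.0]]  # (|0>_A|1>_B - |1>_A|0>_B) / sqrt(2)
product = [[s, 0.0], [s, 0.0]]     # ((|0>_A + |1>_A) / sqrt(2)) |0>_B

rho_ent = reduced_density_a(entangled)
rho_prod = reduced_density_a(product)
print(rho_ent, purity(rho_ent))    # diagonal (1/2, 1/2): a mixed state
print(rho_prod, purity(rho_prod))  # projector onto (|0> + |1>)/sqrt(2): a pure state
```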

Entropy

In this section we briefly discuss entropy of a mixed state and how it can be viewed as a measure of entanglement.

Definition

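In classical information theory, to a probability distribution $p = (p_1, \ldots, p_n)$, one can associate the Shannon entropy:[citation needed]

$$H(p) = H(p_1, \ldots, p_n) = -\sum_i p_i \log_2 p_i.$$

Since a mixed state ρ is a probability distribution over an ensemble, this leads naturally to the definition of the von Neumann entropy:

$$S(\rho) = -\operatorname{Tr}\left(\rho \log_2 \rho\right),$$

where the logarithm is again taken in base 2. In general, to calculate $\log_2 \rho$, one would use the Borel functional calculus. If ρ acts on a finite-dimensional Hilbert space and has eigenvalues $\lambda_1, \ldots, \lambda_n$, then we recover the Shannon entropy:

$$S(\rho) = -\operatorname{Tr}\left(\rho \log_2 \rho\right) = -\sum_i \lambda_i \log_2 \lambda_i.$$

Since an event of probability 0 should not contribute to the entropy, we adopt the convention that $0 \log 0 = 0$. This extends to the infinite-dimensional case as well: if ρ has spectral resolution $\rho = \int \lambda \, dP_\lambda$, then we assume the same convention when calculating

$$\rho \log_2 \rho = \int \lambda \log_2 \lambda \, dP_\lambda.$$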

As in statistical mechanics, the more uncertainty (number of microstates) a system possesses, the larger its entropy. For example, the entropy of any pure state is zero, which is unsurprising since there is no uncertainty about a system in a pure state. The entropy of either subsystem of the entangled state discussed above is $\log_2 2 = 1$, which can be shown to be the maximum entropy for $2 \times 2$ mixed states.
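For a single qubit the eigenvalues of ρ have a closed form, so the von Neumann entropy reduces to a Shannon entropy of two numbers. This sketch is limited to 2 × 2 Hermitian matrices (a simplifying assumption; larger matrices would need a numerical eigensolver) and applies the 0 log 0 = 0 convention by skipping zero eigenvalues.

```python
import math


def shannon_entropy(probs):
    """H = -sum_i p_i log2 p_i, skipping p_i <= 0 (the 0 log 0 = 0 convention)."""
    return sum(-p * math.log2(p) for p in probs if p > 0)


def eigenvalues_2x2(rho):
    """Eigenvalues of a 2x2 Hermitian matrix [[p, q], [conj(q), r]]."""
    p, q, r = rho[0][0].real, rho[0][1], rho[1][1].real
    mean = (p + r) / 2
    radius = math.sqrt(((p - r) / 2) ** 2 + abs(q) ** 2)
    return mean + radius, mean - radius


def von_neumann_entropy(rho):
    """S(rho) = -Tr(rho log2 rho) = Shannon entropy of the eigenvalues of rho."""
    return shannon_entropy(eigenvalues_2x2(rho))


maximally_mixed = [[0.5, 0.0], [0.0, 0.5]]  # reduced state of the entangled example
pure_plus = [[0.5, 0.5], [0.5, 0.5]]        # projector onto (|0> + |1>)/sqrt(2)

print(von_neumann_entropy(maximally_mixed))  # 1.0
print(von_neumann_entropy(pure_plus))        # 0.0
```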

As a measure of entanglement

Entropy provides one tool which can be used to quantify entanglement, although other entanglement measures exist.[citation needed] If the overall system is pure, the entropy of one subsystem can be used to measure its degree of entanglement with the other subsystems.

For bipartite pure states, the von Neumann entropy of reduced states is the unique measure of entanglement in the sense that it is the only function on the family of states that satisfies certain axioms required of an entanglement measure.

It is a classical result that the Shannon entropy achieves its maximum at, and only at, the uniform probability distribution {1/n,...,1/n}. Therefore, a bipartite pure state ρ on $H_A \otimes H_B$ is said to be a maximally entangled state if, in some local basis, its reduced state is the diagonal matrix

$$\begin{pmatrix} 1/n & & \\ & \ddots & \\ & & 1/n \end{pmatrix}.$$

For mixed states, the reduced von Neumann entropy is not the unique entanglement measure.

As an aside, the information-theoretic definition is closely related to entropy in the sense of statistical mechanics[citation needed] (comparing the two definitions, we note that, in the present context, it is customary to set the Boltzmann constant $k = 1$). For example, by properties of the Borel functional calculus, we see that for any unitary operator $U$,

$$S(\rho) = S\left(U \rho U^*\right).$$

Indeed, without the above property, the von Neumann entropy would not be well-defined. In particular, $U$ could be the time evolution operator of the system, i.e.

$$U(t) = \exp\left(\frac{-iHt}{\hbar}\right),$$

where $H$ is the Hamiltonian of the system. This associates the reversibility of a process with its resulting entropy change, i.e. a process is reversible if, and only if, it leaves the entropy of the system invariant. This provides a connection between quantum information theory and thermodynamics.
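The invariance S(ρ) = S(UρU*) under unitary time evolution can be checked numerically for one qubit. This sketch assumes, purely for illustration, the Hamiltonian H = (ħω/2)σ_x with ħ = 1, for which U(t) = cos(ωt/2) I − i sin(ωt/2) σ_x; the state ρ and the parameter values are arbitrary choices. The evolved state differs from ρ, but its eigenvalues, and hence its entropy, do not change.

```python
import math


def matmul(x, y):
    """Product of two 2x2 matrices."""
    return [[sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def dagger(m):
    """Conjugate transpose of a 2x2 matrix."""
    return [[m[j][i].conjugate() for j in range(2)] for i in range(2)]


def evolution_operator(omega, t):
    """U(t) = exp(-iHt) for the assumed H = (omega / 2) sigma_x, with hbar = 1."""
    c, s = math.cos(omega * t / 2), math.sin(omega * t / 2)
    return [[complex(c, 0), complex(0, -s)], [complex(0, -s), complex(c, 0)]]


def entropy_2x2(rho):
    """Von Neumann entropy (base 2) from the closed-form 2x2 Hermitian eigenvalues."""
    p, q, r = rho[0][0].real, rho[0][1], rho[1][1].real
    radius = math.sqrt(((p - r) / 2) ** 2 + abs(q) ** 2)
    eigs = ((p + r) / 2 + radius, (p + r) / 2 - radius)
    return sum(-lam * math.log2(lam) for lam in eigs if lam > 0)


rho = [[0.7, 0.2], [0.2, 0.3]]
u = evolution_operator(omega=1.0, t=1.3)
rho_t = matmul(matmul(u, rho), dagger(u))

print("before:", rho[0][0], entropy_2x2(rho))
print("after: ", rho_t[0][0].real, entropy_2x2(rho_t))  # new diagonal, same entropy
```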

Applications of entanglement

Entanglement has many applications in quantum information theory. Mixed state entanglement can be viewed as a resource for quantum communication. With the aid of entanglement, otherwise impossible tasks may be achieved. Among the best known applications of entanglement are superdense coding and quantum state teleportation. Efforts to quantify this resource are often termed entanglement theory.[5][6]

Quantum entanglement also has many different applications in the emerging technologies of quantum computing and quantum cryptography, and has been used to realize quantum teleportation experimentally.[7] At the same time, it prompts some of the more philosophically oriented discussions concerning quantum theory.[citation needed] The correlations predicted by quantum mechanics, and observed in experiment, reject the principle of local realism, which is that information about the state of a system can only be mediated by interactions in its immediate surroundings and that the state of a system exists and is well-defined before any measurement. Different views of what is actually occurring in the process of quantum entanglement can be related to different interpretations of quantum mechanics. In the previously standard one, the Copenhagen interpretation, quantum mechanics is neither "real" (since measurements do not register pre-existing properties of the system but instead prepare them) nor "local" (since the state vector comprises the simultaneous probability amplitudes for all positions, as in a wavefunction $\psi(\mathbf{r})$); the properties of entanglement are some of the many reasons why the Copenhagen interpretation is no longer considered standard by a large proportion of the scientific community.

Other uses:

- Quantum computers use entanglement and superposition.

- The Reeh-Schlieder theorem of quantum field theory is sometimes seen as the QFT analogue of quantum entanglement.

See also

- Entanglement witness

- Separable states

- Squashed entanglement

- Quantum coherence

- Action at a distance (physics)

- Ghirardi-Rimini-Weber theory

- Quantum pseudo-telepathy

- Entanglement distillation

- Quantum mysticism

References

Specific references:

- Einstein A, Podolsky B, Rosen N (1935). "Can Quantum-Mechanical Description of Physical Reality Be Considered Complete?". Phys. Rev. 47 (10): 777–780. doi:10.1103/PhysRev.47.777

- Fred R. Shapiro, Joseph Epstein (2006). The Yale Book of Quotations. Yale University Press. p. 228. ISBN 0300107986

- Paulson, Tom (15 November 2006). "Going for a blast in the real past". Seattle Post-Intelligencer. Retrieved 19 December 2006

- Boyle, Alan (21 November 2006). "Time-travel physics seems stranger than fiction". MSNBC. Retrieved 19 December 2006

- Entanglement Theory Tutorials from Imperial College London

- M.B. Plenio and S. Virmani, An introduction to entanglement measures, Quant. Inf. Comp. 7, 1 (2007)

- Dik Bouwmeester, Jian-Wei Pan, Klaus Mattle, Manfred Eibl, Harald Weinfurter & Anton Zeilinger, Experimental Quantum Teleportation, Nature vol. 390, 11 Dec 1997, p. 575. (Summarized at http://www.quantum.univie.ac.at/research/photonentangle/teleport/)

General references:

- Horodecki M, Horodecki P, Horodecki R (1996). "Separability of mixed states: necessary and sufficient conditions". Physics Letters A 210

- Gurvits L (2003). "Classical deterministic complexity of Edmonds' Problem and quantum entanglement". Proceedings of the thirty-fifth annual ACM symposium on Theory of computing. 10 pages. doi:10.1145/780542.780545

- Bengtsson I, Zyczkowski K (2006). Geometry of Quantum States: An Introduction to Quantum Entanglement. Cambridge: Cambridge University Press

- Steward EG (24 March 2008). Quantum Mechanics: Its Early Development and the Road to Entanglement. Imperial College Press. ISBN 978-1860949784

- Horodecki R, Horodecki P, Horodecki M, Horodecki K (2007). "Quantum entanglement". Rev. Mod. Phys.

- Plenio MB, Virmani S (2007). "An introduction to entanglement measures". Quant. Inf. Comp. 7, 1

External links

- Quantum Entanglement at Stanford Encyclopedia of Philosophy

- Entanglement experiment with photon pairs - interactive

- Multiple entanglement and quantum repeating

- How to entangle photons experimentally

- Quantum Entanglement

- Quantum Entanglement and Bell's Theorem at MathPages

- Recorded research seminars at Imperial College relating to quantum entanglement

- How Quantum Entanglement Works

- Quantum Entanglement and Decoherence: 3rd International Conference on Quantum Information (ICQI)

- The original EPR paper

- Ion trapping quantum information processing

- IEEE Spectrum On-line: The trap technique

- Was Einstein Wrong?: A Quantum Threat to Special Relativity



An interpretation of quantum mechanics is a statement which attempts to explain how quantum mechanics informs our understanding of nature. Although quantum mechanics has received thorough experimental testing, many of these experiments are open to different interpretations. There exist a number of contending schools of thought, differing over whether quantum mechanics can be understood to be deterministic, which elements of quantum mechanics can be considered "real", and other matters.

Although interpretation is today of special interest to philosophers of physics, many physicists continue to show a strong interest in the subject. Physicists usually consider an interpretation of quantum mechanics to be an interpretation of the mathematical formalism of quantum mechanics, specifying the physical meaning of the mathematical entities of the theory.

Historical background

The definition of terms used by researchers in quantum theory (such as wavefunctions and matrix mechanics) progressed through many stages. For instance, Schrödinger originally viewed the wavefunction associated with the electron as corresponding to the charge density of an object smeared out over an extended, possibly infinite, volume of space. Max Born interpreted it as simply corresponding to a probability distribution. These are two different interpretations of the wavefunction. In one it corresponds to a material field, in the other it "just" corresponds to a probability density.

Most physicists think quantum mechanics does not need interpretation.[citation needed] More precisely, they think it only requires an instrumentalist interpretation. Besides the instrumentalist interpretation, the Copenhagen interpretation is the most popular among physicists, followed by the many-worlds and consistent histories interpretations.[citation needed] But it is also true that most physicists consider non-instrumental questions (in particular ontological questions) to be irrelevant to physics.[citation needed] They fall back on David Mermin's expression "shut up and calculate", often attributed (perhaps erroneously) to Richard Feynman (see [11]).

Obstructions to direct interpretation

The difficulties of interpretation reflect a number of points about the orthodox description of quantum mechanics, including:

- The abstract, mathematical nature of the description of quantum mechanics.

- The existence of what appear to be non-deterministic and irreversible processes in quantum mechanics.

- The phenomenon of entanglement, and in particular, the correlations between remote events that are not expected in classical theory.

- The complementarity of possible descriptions of reality.

- The essential role played by observers and the process of measurement in the theory.

First, the accepted mathematical structure of quantum mechanics is based on fairly abstract mathematics, such as Hilbert spaces and operators on those Hilbert spaces. In classical mechanics and electromagnetism, on the other hand, properties of a point mass or properties of a field are described by real numbers or functions defined on two- or three-dimensional sets. These have direct, spatial meaning, and in these theories there seems to be less need to provide a special interpretation for those numbers or functions.

Further, the process of measurement plays an essential role in the theory. Put simply: the world around us seems to be in a specific state, yet quantum mechanics describes it with wave functions governing the probabilities of values. In general the wave function assigns non-zero probabilities to all possible values for a given physical quantity, such as position. How then is it that we come to see a particle at a specific position when its wave function is spread across all space? In order to describe how specific outcomes arise from the probabilities, the direct interpretation introduces the concept of measurement. According to the theory, wave functions interact with each other and evolve in time according to the laws of quantum mechanics until a measurement is performed, at which time the system will take on one of the possible values with probability governed by the wave function. Measurement can interact with the system state in somewhat peculiar ways, as is illustrated by the double-slit experiment.

Thus the mathematical formalism used to describe the time evolution of a non-relativistic system proposes two somewhat different kinds of transformations:

- Reversible transformations described by unitary operators on the state space. These transformations are determined by solutions to the Schrödinger equation.

- Non-reversible and unpredictable transformations described by mathematically more complicated transformations (see quantum operations). Examples of these transformations are those that are undergone by a system as a result of measurement.

A restricted version of the problem of interpretation in quantum mechanics consists in providing some sort of plausible picture, just for the second kind of transformation. This problem may be addressed by purely mathematical reductions, for example by the many-worlds or the consistent histories interpretations.

In addition to the unpredictable and irreversible character of measurement processes, there are other elements of quantum physics that distinguish it sharply from classical physics and which cannot be represented by any classical picture. One of these is the phenomenon of entanglement, as illustrated in the EPR paradox, which seemingly violates principles of local causality [1].

Another obstruction to direct interpretation is the phenomenon of complementarity, which seems to violate basic principles of propositional logic. Complementarity says there is no logical picture (obeying classical propositional logic) that can simultaneously describe and be used to reason about all properties of a quantum system S. This is often phrased by saying that there are "complementary" sets A and B of propositions that can describe S, but not at the same time. Examples of A and B are propositions involving a wave description of S and a corpuscular description of S. The latter statement is one part of Niels Bohr's original formulation, which is often equated to the principle of complementarity itself.

Complementarity is not usually taken to mean that classical logic fails, although Hilary Putnam did take that view in his paper Is logic empirical?. Instead complementarity means that composition of physical properties for S (such as position and momentum both having values in certain ranges) using propositional connectives does not obey rules of classical propositional logic. As is now well-known (Omnès, 1999) the "origin of complementarity lies in the noncommutativity of operators" describing observables in quantum mechanics.

Problematic status of pictures and interpretations

The precise ontological status of each of the interpreting pictures remains a matter of philosophical argument.

In other words, if we interpret the formal structure X of quantum mechanics by means of a structure Y (via a mathematical equivalence of the two structures), what is the status of Y? This is the old question of saving the phenomena, in a new guise.

Some physicists, for example Asher Peres and Chris Fuchs, seem to argue that an interpretation is nothing more than a formal equivalence between sets of rules for operating on experimental data. This would suggest that the whole exercise of interpretation is unnecessary.

Instrumentalist interpretation

Any modern scientific theory requires at the very least an instrumentalist description which relates the mathematical formalism to experimental practice and prediction. In the case of quantum mechanics, the most common instrumentalist description is an assertion of statistical regularity between state preparation processes and measurement processes. That is, if a measurement of a real-valued quantity is performed many times, each time starting with the same initial conditions, the outcome is a well-defined probability distribution over the real numbers; moreover, quantum mechanics provides a computational instrument to determine statistical properties of this distribution, such as its expectation value.

Calculations for measurements performed on a system S postulate a Hilbert space H over the complex numbers. When the system S is prepared in a pure state, it is associated with a vector in H. Measurable quantities are associated with Hermitian operators acting on H: these are referred to as observables.

Repeated measurement of an observable $A$ for $S$ prepared in state $\psi$ yields a distribution of values. The expectation value of this distribution is given by the expression

$$\langle A \rangle_\psi = \langle \psi | A | \psi \rangle.$$

This mathematical machinery gives a simple, direct way to compute a statistical property of the outcome of an experiment, once it is understood how to associate the initial state with a Hilbert space vector, and the measured quantity with an observable (that is, a specific Hermitian operator).

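As an example of such a computation, the probability of finding the system in a given state $|\phi\rangle$ is given by computing the expectation value of a (rank-1) projection operator

$$\Pi = |\phi\rangle\langle\phi|.$$

The probability is then the non-negative real number given by

$$P = \langle\psi|\Pi|\psi\rangle = |\langle\psi|\phi\rangle|^2.$$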
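The expectation-value and projection formulas translate directly into code for a finite-dimensional system. A minimal sketch with state vectors as Python lists of amplitudes; the example state (|0⟩ + |1⟩)/√2 and the Pauli matrices used as observables are illustrative choices, not taken from the text.

```python
import math


def inner(u, v):
    """<u|v> = sum_i conj(u_i) v_i."""
    return sum(a.conjugate() * b for a, b in zip(u, v))


def apply(op, v):
    """Matrix-vector product op|v>."""
    return [sum(op[i][j] * v[j] for j in range(len(v))) for i in range(len(op))]


def expectation(op, psi):
    """<A> = <psi|A|psi> for a normalised psi and Hermitian A (real up to round-off)."""
    return inner(psi, apply(op, psi)).real


def born_probability(phi, psi):
    """P = <psi|Pi|psi> with the rank-1 projector Pi = |phi><phi|."""
    projector = [[a * b.conjugate() for b in phi] for a in phi]
    return expectation(projector, psi)


s = 1 / math.sqrt(2)
psi = [s, s]                 # (|0> + |1>) / sqrt(2)
sigma_z = [[1, 0], [0, -1]]
sigma_x = [[0, 1], [1, 0]]

print(expectation(sigma_z, psi), expectation(sigma_x, psi))
print(born_probability([1, 0], psi), abs(inner([1, 0], psi)) ** 2)
```

The two numbers on the second line agree up to floating-point round-off, which is the identity ⟨ψ|Π|ψ⟩ = |⟨ψ|φ⟩|² in the formula above.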

By abuse of language, the bare instrumentalist description can be referred to as an interpretation, although this usage is somewhat misleading since instrumentalism explicitly avoids any explanatory role; that is, it does not attempt to answer the question of what quantum mechanics is talking about.

Summary of common interpretations of QM

Properties of interpretations

An interpretation can be characterized by whether it satisfies certain properties, such as:

- Realism

- Completeness

- Local realism

- Determinism

To explain these properties, we need to be more explicit about the kind of picture an interpretation provides. To that end we will regard an interpretation as a correspondence between the elements of the mathematical formalism M and the elements of an interpreting structure I, where:

- The mathematical formalism consists of the Hilbert space machinery of ket-vectors, self-adjoint operators acting on the space of ket-vectors, unitary time dependence of ket-vectors and measurement operations. In this context a measurement operation can be regarded as a transformation which carries a ket-vector into a probability distribution on ket-vectors. See also quantum operations for a formalization of this concept.

- The interpreting structure includes states, transitions between states, measurement operations and possibly information about the spatial extension of these elements. A measurement operation here refers to an operation which returns a value and may change the system state. Spatial information, for instance, would be exhibited by states represented as functions on configuration space. The transitions may be non-deterministic or probabilistic, and there may be infinitely many states. However, the critical assumption of an interpretation is that the elements of I are regarded as physically real.

In this sense, an interpretation can be regarded as a semantics for the mathematical formalism.

In particular, the bare instrumentalist view of quantum mechanics outlined in the previous section is not an interpretation at all since it makes no claims about elements of physical reality.
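On the formalism side of this correspondence, a measurement operation was described as a transformation carrying a ket-vector into a probability distribution on ket-vectors. A minimal sketch of that map for a projective measurement in an orthonormal basis follows; the function name `measure`, the example state, and the restriction to projective (rather than general) measurements are assumptions for illustration.

```python
def inner(u, v):
    """Inner product <u|v>, conjugate-linear in the first argument."""
    return sum(a.conjugate() * b for a, b in zip(u, v))


def measure(psi, basis, tol=1e-15):
    """Projective measurement of the normalized ket psi in an orthonormal basis.

    Returns a list of (probability, post-measurement ket) pairs, i.e. a
    probability distribution on ket-vectors. The post-measurement ket for
    basis vector b is Pi_b|psi> / ||Pi_b|psi>||, which equals b times the
    phase <b|psi>/|<b|psi>|. Outcomes with negligible probability are dropped.
    """
    distribution = []
    for b in basis:
        amplitude = inner(b, psi)
        probability = abs(amplitude) ** 2
        if probability > tol:
            phase = amplitude / abs(amplitude)
            distribution.append((probability, [phase * x for x in b]))
    return distribution


# Hypothetical example: psi = 0.6|0> + 0.8i|1>, measured in the standard basis.
psi = [complex(0.6), complex(0, 0.8)]
basis = [[complex(1), complex(0)], [complex(0), complex(1)]]
outcomes = measure(psi, basis)
for probability, ket in outcomes:
    print(round(probability, 6), ket)
```

The output is not a single ket but a list of weighted kets, which is exactly what distinguishes a measurement operation from the unitary time dependence of ket-vectors.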

The current use in physics of "completeness" and "realism" is often considered to have originated in the paper (Einstein et al., 1935) which proposed the EPR paradox. In that paper the authors proposed the concepts of an "element of reality" and of the "completeness" of a physical theory. Though they did not define "element of reality", they did provide a sufficient characterization of it, namely a quantity whose value can be predicted with certainty before measuring it or disturbing it in any way. EPR define a "complete physical theory" as one in which every element of physical reality is accounted for by the theory. In the semantic view of interpretation, an interpretation of a theory is complete if every element of the interpreting structure is accounted for by the mathematical formalism. Realism is a property of each of the elements of the mathematical formalism; any such element is real if it corresponds to something in the interpreting structure. For instance, in some interpretations of quantum mechanics (such as the many-worlds interpretation) the ket vector associated with the system state is assumed to correspond to an element of physical reality, while in others it does not.

Determinism is a property characterizing state changes due to the passage of time, namely that the state at an instant of time in the future is a function of the state at the present (see time evolution). It may not always be clear whether a particular interpreting structure is deterministic or not, precisely because there may not be a clear choice for a time parameter. Moreover, a given theory may have two interpretations, one of which is deterministic, and the other not.

Local realism has two parts:

- The value returned by a measurement corresponds to the value of some function on the state space. Stated in another way, this value is an element of reality;

- The effects of measurement have a propagation speed not exceeding some universal bound (e.g., the speed of light). In order for this to make sense, measurement operations must be spatially localized in the interpreting structure.

A precise formulation of local realism in terms of a local hidden variable theory was proposed by John Bell.

Bell's theorem, combined with experimental testing, restricts the kinds of properties a quantum theory can have. For instance, the experimentally observed violation of Bell inequalities implies that quantum mechanics cannot satisfy local realism.
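To make the restriction concrete, the sketch below uses the CHSH form of a Bell inequality (a choice made here; the text does not name a specific inequality). Any local hidden-variable theory satisfies |S| ≤ 2 for S = E(a,b) − E(a,b′) + E(a′,b) + E(a′,b′), whereas quantum mechanics predicts E(α,β) = −cos(α − β) for the spin singlet, giving |S| = 2√2 at the angles chosen below. The helper names and angle choices are illustrative assumptions.

```python
import math


def spin_observable(theta):
    """A(theta) = cos(theta) Z + sin(theta) X: spin along a direction at angle
    theta from the z-axis in the x-z plane (eigenvalues +1 and -1)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, s], [s, -c]]


def kron(a, b):
    """Kronecker (tensor) product of two square matrices stored as nested lists."""
    m = len(b)
    size = len(a) * m
    return [[a[i // m][j // m] * b[i % m][j % m] for j in range(size)]
            for i in range(size)]


# Singlet state (|01> - |10>)/sqrt(2) in the basis |00>, |01>, |10>, |11>.
r = 1 / math.sqrt(2)
singlet = [0.0, r, -r, 0.0]


def correlation(alpha, beta):
    """E(alpha, beta) = <singlet| A(alpha) (x) A(beta) |singlet>; real amplitudes."""
    op = kron(spin_observable(alpha), spin_observable(beta))
    return sum(singlet[i] * op[i][j] * singlet[j]
               for i in range(4) for j in range(4))


# CHSH combination at the angles that maximize the quantum value.
a, a2 = 0.0, math.pi / 2
b, b2 = math.pi / 4, 3 * math.pi / 4
S = correlation(a, b) - correlation(a, b2) + correlation(a2, b) + correlation(a2, b2)

print(abs(S), 2 * math.sqrt(2))  # |S| = 2*sqrt(2) ~ 2.828 > 2
```

Experiments measuring these correlations find values consistent with the quantum prediction and above the local bound of 2, which is the sense in which local realism is ruled out.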

Ensemble interpretation, or statistical interpretation

The ensemble interpretation, or statistical interpretation, can be viewed as a minimalist interpretation. That is, it claims to make the fewest assumptions associated with the standard mathematical formalism. At its heart, it takes Born's statistical interpretation to its fullest extent. The interpretation states that the wave function does not apply to an individual system (for example, a single particle) but is an abstract statistical quantity that applies only to an ensemble of similarly prepared systems or particles. Probably the most notable supporter of such an interpretation was Einstein:

The attempt to conceive the quantum-theoretical description as the complete description of the individual systems leads to unnatural theoretical interpretations, which become immediately unnecessary if one accepts the interpretation that the description refers to ensembles of systems and not to individual systems.— Einstein in Albert Einstein: Philosopher-Scientist, ed. P.A. Schilpp (Harper & Row, New York)

Probably the most prominent current advocate of the ensemble interpretation is Leslie E. Ballentine, professor at Simon Fraser University and author of the graduate-level textbook Quantum Mechanics: A Modern Development.

Experimental evidence favouring the ensemble interpretation is provided in a particularly clear way in Akira Tonomura's Video clip 1[12], presenting results of a double-slit experiment with an ensemble of individual electrons. It is evident from this experiment that, since the quantum mechanical wave function describes the final interference pattern, it must describe the ensemble rather than an individual electron, the latter being seen to yield a pointlike impact on a screen.
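The ensemble argument can be illustrated with a toy simulation: each simulated electron is a single point-like detection drawn from the intensity |ψ|² on the screen, and the fringes appear only when many detections are accumulated. The intensity profile below (cos² fringes under a Gaussian envelope) is a hypothetical stand-in, not fitted to Tonomura's data, and rejection sampling is simply one convenient way to draw the points.

```python
import math
import random


def intensity(x):
    """Hypothetical idealized two-slit pattern (arbitrary units): cos^2 fringes
    under a Gaussian envelope. Not fitted to any real experiment; max value is 1."""
    return math.cos(3.0 * x) ** 2 * math.exp(-x * x / 4.0)


def detect(n, rng, x_max=4.0):
    """Draw n individual point-like impact positions on [-x_max, x_max] by
    rejection sampling from intensity(x). Each accepted x is one electron."""
    hits = []
    while len(hits) < n:
        x = rng.uniform(-x_max, x_max)
        if rng.random() < intensity(x):
            hits.append(x)
    return hits


def histogram(xs, bins=40, x_max=4.0):
    """Count impacts in equal-width bins across [-x_max, x_max]."""
    width = 2 * x_max / bins
    counts = [0] * bins
    for x in xs:
        k = min(int((x + x_max) / width), bins - 1)
        counts[k] += 1
    return counts


rng = random.Random(0)       # fixed seed so the run is reproducible
few = detect(10, rng)        # a handful of isolated dots
many = detect(20000, rng)    # the fringes appear only in the accumulated ensemble
counts = histogram(many)

bright = counts[20]          # bin [0.0, 0.2): near the central bright fringe
dark = counts[22]            # bin [0.4, 0.6): contains the dark fringe at x = pi/6
print(bright, dark)
```

Nothing in a single sampled position carries the interference pattern; it is a property of the distribution of many positions, which is the point the ensemble interpretation emphasizes.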

The Copenhagen interpretation

The Copenhagen interpretation is the "standard" interpretation of quantum mechanics formulated by Niels Bohr and Werner Heisenberg while collaborating in Copenhagen around 1927. Bohr and Heisenberg extended the probabilistic interpretation of the wavefunction, proposed by Max Born. The Copenhagen interpretation rejects questions like "where was the particle before I measured its position" as meaningless. The measurement process randomly picks out exactly one of the many possibilities allowed for by the state's wave function.

Participatory Anthropic Principle (PAP)

Viewed by some as mysticism (see "consciousness causes collapse"), Wheeler's Participatory Anthropic Principle is the speculative theory that observation by a conscious observer is responsible for wavefunction collapse. It is an attempt to solve the Wigner's friend paradox by stating that collapse occurs at the first "conscious" observer. Supporters claim that PAP is not a revival of substance dualism, since (in one version of the theory) consciousness and objects are entangled and cannot be considered distinct. Although such an idea could be added to other interpretations of quantum mechanics, PAP was added to the Copenhagen interpretation (Wheeler studied in Copenhagen under Niels Bohr in the 1930s). It may be possible to devise an experiment to test this theory, since it depends on an observer to collapse a wavefunction. The observer has to be conscious, but whether Schrödinger's cat or a person is required would itself be part of the experiment (hence a successful experiment could also define consciousness). However, the experiment would need to be carefully designed because, in Wheeler's view, it would have to ensure that an unobserved event remained unobserved for all time [2].

Consistent histories

The consistent histories interpretation generalizes the conventional Copenhagen interpretation and attempts to provide a natural interpretation of quantum cosmology. The theory is based on a consistency criterion that allows the history of a system to be described so that the probabilities for each history obey the additive rules of classical probability. It is claimed to be consistent with the Schrödinger equation.

According to this interpretation, the purpose of a quantum-mechanical theory is to predict the relative probabilities of various alternative histories.

Objective collapse theories

Objective collapse theories differ from the Copenhagen interpretation in regarding both the wavefunction and the process of collapse as ontologically objective. In objective theories, collapse occurs randomly ("spontaneous localization") or when some physical threshold is reached, with observers having no special role. Thus, they are realistic, indeterministic, no-hidden-variables theories. The mechanism of collapse is not specified by standard quantum mechanics, which would need to be extended if this approach is correct; objective collapse is therefore more of a theory than an interpretation. Examples include the Ghirardi-Rimini-Weber theory[3] and the Penrose interpretation.[4]

Many worlds

The many-worlds interpretation (MWI) is an interpretation of quantum mechanics that rejects the non-deterministic and irreversible wavefunction collapse associated with measurement in the Copenhagen interpretation in favor of a description in terms of quantum entanglement and reversible time evolution of states. The phenomena associated with measurement are claimed to be explained by decoherence, which occurs when states interact with the environment. As a result of decoherence, the world-lines of macroscopic objects repeatedly split into mutually unobservable, branching histories: distinct universes within a greater multiverse.

Stochastic mechanics

An entirely classical derivation and interpretation of the Schrödinger equation, by analogy with Brownian motion, was suggested by Princeton University professor Edward Nelson in 1966 (“Derivation of the Schrödinger Equation from Newtonian Mechanics”, Phys. Rev. 150, 1079-1085). Similar considerations had been published earlier, e.g. by R. Fürth (1933), I. Fényes (1952) and Walter Weizel (1953), and are referenced in Nelson's paper. More recent work on the subject can be found in M. Pavon, “Stochastic mechanics and the Feynman integral”, J. Math. Phys. 41, 6060-6078 (2000). An alternative stochastic interpretation was suggested by Roumen Tsekov[5].

The decoherence approach

Decoherence occurs when a system interacts with its environment, or any complex external system, in a thermodynamically irreversible way, so that different elements in the quantum superposition of the combined system-plus-environment wave function can no longer (or are extremely unlikely to) interfere with each other. Decoherence does not provide a mechanism for actual wave function collapse; rather, it is claimed to provide a mechanism for the appearance of wave function collapse. The quantum nature of the system is simply "leaked" into the environment, so that a total superposition of the wave function still exists but cannot be detected by experiments that can (so far) be carried out in practice.
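A toy model shows how coherence leaks into the environment while the total state stays a pure superposition. The sketch assumes a system qubit a|0⟩ + b|1⟩ that becomes correlated with n_env environment qubits, each ending in e0 = (1, 0) on the |0⟩ branch and e1 = (cos θ, sin θ) on the |1⟩ branch; this coupling is hypothetical and chosen only to make the mechanism visible. The off-diagonal term of the system's reduced density matrix is multiplied by the environment overlap cos(θ)^n_env, and the interference term in the probability of the outcome |+⟩ shrinks with it.

```python
import math


def reduced_density_matrix(a, b, theta, n_env):
    """Reduced density matrix of a system qubit for the entangled state
    a|0>|E0> + b|1>|E1>, where |E0> and |E1> are products of n_env environment
    qubits in states e0 = (1, 0) and e1 = (cos theta, sin theta) respectively.
    Hypothetical toy model of the system-environment interaction."""
    e0 = (1.0, 0.0)
    e1 = (math.cos(theta), math.sin(theta))
    single_overlap = sum(x * y for x, y in zip(e1, e0))   # <e1|e0> (real vectors)
    env_overlap = single_overlap ** n_env                  # <E1|E0> for product states
    rho01 = a * b.conjugate() * env_overlap                # coherence term
    return [[abs(a) ** 2, rho01],
            [rho01.conjugate(), abs(b) ** 2]]


def prob_plus(rho):
    """Probability of the outcome |+> = (|0> + |1>)/sqrt(2): 1/2 + Re(rho01).
    Its deviation from 1/2 is the interference term."""
    return 0.5 * (rho[0][0] + rho[1][1]) + rho[0][1].real


a = b = complex(1 / math.sqrt(2))   # equal-weight superposition (assumption)
for n_env in (0, 1, 10, 100):
    rho = reduced_density_matrix(a, b, theta=0.3, n_env=n_env)
    print(n_env, abs(rho[0][1]), prob_plus(rho))
```

The diagonal entries never change, so local statistics in the 0/1 basis are untouched; only the interference visible in the |+⟩ outcome fades, which is the "appearance of collapse" described in the text.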

Many minds

The many-minds interpretation of quantum mechanics extends the many-worlds interpretation by proposing that the distinction between worlds should be made at the level of the mind of an individual observer.

Quantum logic

Quantum logic can be regarded as a kind of propositional logic suited to understanding the apparent anomalies of quantum measurement, most notably those concerning the composition of measurement operations on complementary variables. This research area and its name originated in the 1936 paper by Garrett Birkhoff and John von Neumann, who attempted to reconcile some of the apparent inconsistencies of classical Boolean logic with the facts of measurement and observation in quantum mechanics.
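A standard illustration of how quantum logic departs from Boolean logic is the failure of the distributive law when propositions are subspaces, AND is intersection, and OR is the span. For simplicity the sketch below uses lines in the real plane as a stand-in for the spin-1/2 state space; the three rays chosen (spin-z up, spin-x up, spin-x down) behave the same way in the complex space. The representation and helper names are assumptions for illustration.

```python
import math

ZERO = "{0}"    # the zero subspace: the always-false proposition
PLANE = "R^2"   # the whole space: the always-true proposition


def line(x, y):
    """The 1-dimensional subspace spanned by (x, y), stored as a unit vector
    with a canonical sign (rounded) so that equal lines compare equal."""
    n = math.hypot(x, y)
    x, y = x / n, y / n
    if x < 0 or (x == 0 and y < 0):
        x, y = -x, -y
    return (round(x, 12), round(y, 12))


def meet(p, q):
    """Quantum-logic AND: intersection of subspaces."""
    if p == PLANE:
        return q
    if q == PLANE:
        return p
    if p == ZERO or q == ZERO:
        return ZERO
    return p if p == q else ZERO    # two distinct lines meet only at the origin


def join(p, q):
    """Quantum-logic OR: smallest subspace containing both."""
    if p == ZERO:
        return q
    if q == ZERO:
        return p
    if p == PLANE or q == PLANE:
        return PLANE
    return p if p == q else PLANE   # two distinct lines span the whole plane


p = line(1, 0)     # "spin along z is up"
q = line(1, 1)     # "spin along x is up"
r = line(1, -1)    # "spin along x is down"

lhs = meet(p, join(q, r))               # p AND (q OR r)
rhs = join(meet(p, q), meet(p, r))      # (p AND q) OR (p AND r)
print(lhs, rhs)                         # (1.0, 0.0) {0}: distributivity fails
```

Classically the two sides must agree; here "q OR r" is the trivially true proposition, so the left side is just p, while each conjunction on the right is the false proposition.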

The Bohm interpretation

The Bohm interpretation of quantum mechanics is a theory by David Bohm in which particles, which always have positions, are guided by the wavefunction. The wavefunction evolves according to the Schrödinger equation and never collapses. The theory takes place in a single space-time, is non-local, and is deterministic. The simultaneous determination of a particle's position and velocity is subject to the usual uncertainty principle constraints, which is why the theory was originally called one of "hidden" variables.

It has been shown to be empirically equivalent to the Copenhagen interpretation. The measurement problem is claimed to be resolved by the particles having definite positions at all times [6]. Collapse is explained as phenomenological. [7]
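How the wavefunction guides a particle can be sketched with the one-dimensional guidance equation of de Broglie-Bohm theory, v(x) = (ħ/m) Im(ψ′(x)/ψ(x)); this standard form is not stated in the section itself. The sketch assumes natural units ħ = m = 1 and a central-difference derivative; the function names and the two example wavefunctions are hypothetical.

```python
import cmath
import math

HBAR = 1.0  # natural units: hbar = m = 1 (assumption for the sketch)
MASS = 1.0


def bohm_velocity(psi, x, h=1e-6):
    """Guidance equation of the de Broglie-Bohm theory in one dimension,
    v(x) = (hbar / m) * Im(psi'(x) / psi(x)), with psi' by central difference."""
    dpsi = (psi(x + h) - psi(x - h)) / (2 * h)
    return (HBAR / MASS) * (dpsi / psi(x)).imag


def plane_wave(x, k=2.0):
    """Plane wave exp(i k x): the guidance equation gives v = hbar k / m."""
    return cmath.exp(1j * k * x)


def real_gaussian(x):
    """A real-valued wavefunction: its phase is constant, so v = 0 everywhere."""
    return complex(math.exp(-x * x))


v_plane = bohm_velocity(plane_wave, 0.7)
v_gauss = bohm_velocity(real_gaussian, 0.7)
print(v_plane, v_gauss)  # approximately 2.0 and 0.0
```

Only the phase of ψ enters the velocity, which is why a particle in a real-valued state stands still in this picture even though its position is uncertain.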

Transactional interpretation

The transactional interpretation of quantum mechanics (TIQM) by John G. Cramer [13] is an interpretation of quantum mechanics inspired by Richard Feynman's contributions to quantum electrodynamics. It describes quantum interactions in terms of a standing wave formed by retarded (forward-in-time) and advanced (backward-in-time) waves. The author argues that it avoids the philosophical problems of the Copenhagen interpretation and the role of the observer, and that it resolves various quantum paradoxes.

Relational quantum mechanics